A story about a novice SRE's experience attending a HashiCorp Meetup

Table of contents

- 1 ■ Bullet points of impressions

- 2 ■ HashiCorp Products: Active in AI and analytical platforms!

- 3 ■ HashiCorp software that supports container infrastructure

- 3.1 Lollipop! Managed Cloud

- 3.2 Why develop and use your own?

- 3.3 Overall Features

- 3.4 Terraform

- 3.5 Consul

- 3.6 Vault

- 3.7 PKI RootCA distribution issue

- 3.8 Using Consul Template

- 3.9 Application Token Distribution Issues

- 3.10 Solution to the Application Token distribution problem

- 3.11 Vault Token expired and caused a problem

- 3.12 Impressions

- 4 ■ Terraform/Packer support Applibot's DevOps

- 5 ■ tfnotify - Show Terraform execution plan beautifully on GitHub

- 6 ■ About dwango's use of HashiCorp OSS

My name is Teraoka and I'm an infrastructure engineer.

I'm part of the SRE team at our company, and I'm responsible for all aspects of infrastructure design, construction, and operation.

While SRE generally stands for "Site Reliability Engineering," we call it "Service Reliability Engineering" because we look at the entire service, not just the site.

In this post, I'll write about my experience attending a HashiCorp Meetup.

The venue was Shibuya Hikarie in Tokyo, where we learned about the much-talked-about HashiCorp tools that support DevOps. It's about a three-hour Shinkansen ride from Osaka, so it's pretty far...

I'm secretly looking forward to the next one being held in Osaka!

■ Bullet points of impressions

- Prosciutto and beer are the best

- Mitchell Hashimoto loves puns (he handed out chopsticks, "hashi" in Japanese, as a nod to HashiCorp)

- Approximately 80% of people use Terraform

- About 40% use Packer

- It seems not many people are using Consul/Vault/Nomad yet

- I'm interested in Consul Connect (which can encrypt communication between services)

- I'd like to try Sentinel (Policy as Code), so I hope it will be released as open source!

DeNA provided us with prosciutto and beer, which were delicious

We also received a whole ham as a gift! pic.twitter.com/gkvdEB20zK

— HashiCorp Japan (@hashicorpjp) September 12, 2018

Mitchell Hashimoto's talk about puns was interesting, and I was impressed that he was true to his word and actually handed out chopsticks at the venue.

On the technology side, Terraform is incredibly popular.

When the audience was asked who had used it, almost everyone raised their hand.

I'm still only partway into Packer, but it's the best-practice tool for managing machine images, so I should get comfortable with it.

Our company uses Terraform/Packer in only some projects, so we plan to first build a foundation by modularizing and standardizing the code, and then start evaluating Consul, one of the tools we haven't adopted yet...

Below, I'll summarize what each speaker said.

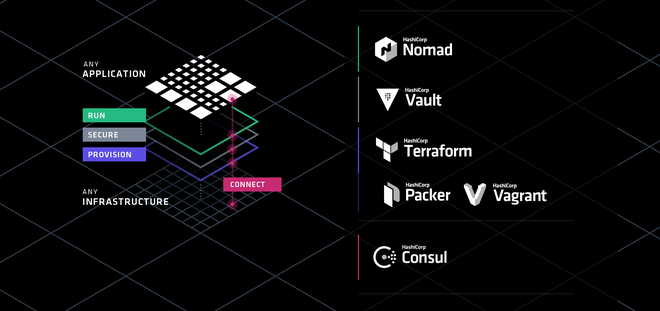

■ HashiCorp Products: Active in AI and analytical platforms!

Mr. Hidenori Matsuki, AI Systems Department, System Headquarters, DeNA Co., Ltd.

・Responsible for the infrastructure of AI and analytics platforms

・Building and operating infrastructure that lets AI R&D engineers develop easily and freely.

・Resolving issues with AI and analytics platform infrastructure using HashiCorp tools.

AI Infrastructure

There are multiple small AI projects with 2-3 people each.

Each project uses AWS and GCP and handles highly confidential data in very large volumes, so infrastructure must be set up quickly for each platform.

Resolving AI infrastructure issues

・Using Terraform

・Terraform serves as the infrastructure provisioning tool

・Instances are launched with Terraform, and middleware is installed with itamae

・Instances can now be built easily with these tools

・GPU-equipped instances are launched to build machine learning infrastructure

・Using Packer (proposal)

・Promoting Dockerization of the AI infrastructure

・Want to use Packer to manage the images in AWS/GCP Docker repositories

・Want to write Packer templates in HCL as well (I couldn't help nodding in agreement; it makes sense...)

Analytics Infrastructure

・Collect service logs with fluentd

・Analyze the collected logs with Hadoop

・Input the results into BigQuery with embulk

・Visualize from ArgusDashboard

・Hadoop version upgrades are difficult...

・Even verifying the analysis infrastructure is difficult in the first place

Resolving analytical infrastructure issues

・Testing the analysis infrastructure with Vagrant!

・But it's still hard... (Apparently, it's still an unfinished project)

Impressions

・I thought Terraform's multi-provider support was a perfect fit for our requirements.

・Like them, we don't use the Terraform provisioner either (although our company uses Ansible, not itamae).

・I completely agreed with the part about wanting to write Packer in HCL.

■ HashiCorp software that supports container infrastructure

Mr. Tomohiro Oda, Principal Engineer, Technology Infrastructure Team, Technology Department, GMO Pepabo, Inc

Lollipop! Managed Cloud

・Container-based PaaS

・A cloud-style rental server service

・Dynamic scaling according to container load

・No k8s or Docker used

・Uses proprietary container environments called Oohori/FastContainer/Haconiwa

・All proprietary development (developed in golang)

Why develop and use your own?

- High density of containers is necessary to provide an inexpensive container environment.

- User-managed containers need to be continuously secure.

- Container resources and permissions need to be flexibly configurable and dynamic (the container itself changes its resources on the fly).

In short, building their own was necessary to meet these requirements!

Overall Features

・A hybrid environment of OpenStack and Baremetal

・Packer-built images are kept minimal and shared across all roles

・Knife-Zero is used instead of Terraform Provisioner

・The development environment is Vagrant

・Immutable Infrastructure was deliberately abandoned because of frequent major specification changes and the large number of stateful roles

Terraform

・Modules are made reusable wherever possible

・tfstate is managed in S3

・Workspaces separate Production and Staging

・Lifecycle settings are essential to prevent accidents

・terraform fmt is run in CI
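To see how workspaces keep Production and Staging apart in a single S3 bucket, the Go sketch below computes the object key where Terraform's S3 backend stores each workspace's tfstate. It assumes the backend's default `workspace_key_prefix` of `env:`; the key `lollipop/terraform.tfstate` is a hypothetical example, not the speaker's configuration.

```go
package main

import "fmt"

// stateKey returns the S3 object key under which Terraform's S3 backend keeps
// the tfstate for a workspace. The default workspace uses key as-is; other
// workspaces are stored under <prefix>/<workspace>/<key>.
func stateKey(key, workspace, prefix string) string {
	if prefix == "" {
		prefix = "env:" // the backend's default workspace_key_prefix
	}
	if workspace == "" || workspace == "default" {
		return key
	}
	return prefix + "/" + workspace + "/" + key
}

func main() {
	key := "lollipop/terraform.tfstate" // hypothetical backend "key"
	for _, ws := range []string{"default", "staging", "production"} {
		fmt.Printf("%-10s -> %s\n", ws, stateKey(key, ws, ""))
	}
}
```

Because each workspace gets its own object, applying in the production workspace cannot overwrite the staging state.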

Consul

・Mainly service discovery

・Monitoring is split between Consul and Mackerel depending on whether it is external monitoring or service monitoring

・Name resolution is done using Consul's internal DNS and Unbound

・Also used as a storage backend for Vault

・All nodes run the Consul agent and Prometheus's consul/node/blackbox exporters

・Name resolution with Consul on the jump server makes multi-stage SSH convenient

・Proxy upstream can be round-robined using Consul DNS
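Consul's DNS interface answers for names of the form `[tag.]<service>.service[.<datacenter>].consul`, which is what makes the jump-server SSH and proxy round-robin tricks above work. The Go sketch below builds such names, shows a resolver pointed directly at a local Consul agent (assuming the default DNS port 8600 on 127.0.0.1; in the setup described, Unbound would forward `.consul` queries instead), and rotates through the returned addresses the way a proxy might. The service, tag, and datacenter names are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"strings"
	"sync/atomic"
	"time"
)

// consulServiceName builds a Consul DNS name: [tag.]<service>.service[.<datacenter>].consul
func consulServiceName(service, tag, datacenter string) string {
	var parts []string
	if tag != "" {
		parts = append(parts, tag)
	}
	parts = append(parts, service, "service")
	if datacenter != "" {
		parts = append(parts, datacenter)
	}
	parts = append(parts, "consul")
	return strings.Join(parts, ".")
}

// consulResolver sends every DNS query to the given Consul agent address,
// e.g. "127.0.0.1:8600", instead of the system's configured nameserver.
func consulResolver(agentAddr string) *net.Resolver {
	return &net.Resolver{
		PreferGo: true,
		Dial: func(ctx context.Context, network, _ string) (net.Conn, error) {
			d := net.Dialer{Timeout: 2 * time.Second}
			return d.DialContext(ctx, network, agentAddr)
		},
	}
}

// roundRobin hands out upstream addresses in turn, the way a proxy might
// rotate through the A records returned for a Consul service.
type roundRobin struct {
	addrs []string
	next  uint64
}

func (r *roundRobin) Pick() string {
	if len(r.addrs) == 0 {
		return ""
	}
	n := atomic.AddUint64(&r.next, 1) - 1
	return r.addrs[n%uint64(len(r.addrs))]
}

func main() {
	fmt.Println(consulServiceName("web", "", ""))         // web.service.consul
	fmt.Println(consulServiceName("web", "v2", "tokyo1")) // v2.web.service.tokyo1.consul

	// Only succeeds if a Consul agent is answering DNS on 127.0.0.1:8600.
	ctx, cancel := context.WithTimeout(context.Background(), 3*time.Second)
	defer cancel()
	addrs, err := consulResolver("127.0.0.1:8600").LookupHost(ctx, consulServiceName("web", "", ""))
	if err != nil {
		addrs = []string{"10.0.0.1", "10.0.0.2"} // sample addresses so the demo still runs
	}
	rr := &roundRobin{addrs: addrs}
	fmt.Println(rr.Pick(), rr.Pick(), rr.Pick())
}
```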

Vault

・Uses the PKI and Transit secrets engines

・All confidential information stored in the DB is encrypted with Vault

・Issued confidential information is distributed with Chef

・Tokens are used while their TTL is repeatedly extended

・They expire once the max-lease-ttl is reached

PKI RootCA distribution issue

What to do when connecting to a Vault server via TLS:

・When Consul is used as storage, vault.service.consul automatically points to the active Vault.

・Vault is set up as a certificate authority and issues the server certificate itself.

・Vault is sealed whenever it is restarted, so restarting it to pick up a new certificate means unsealing it again.

Using Consul Template

・Sends a SIGHUP signal to Vault to reload the server certificate

・SIGHUP does not seal Vault

・Can also be used for logrotate of the audit logs

・The root CA certificate issued by Vault is distributed with Chef

Application Token Distribution Issues

・AppRole is an auth method that lets applications authenticate against Vault.

・Once authenticated, a token with a specified TTL is issued.

・Applications communicate with Vault using that token.

・It defeats the purpose if the application itself holds the AppRole's role_id and secret_id.

・So tokens are issued outside the application process.

Solution to the Application Token distribution problem

・When the application is deployed, a command performs AppRole authentication.

・The command first POSTs a request extending the TTL of the secret_id, which acts as the authentication password.

・It then authenticates and obtains a token.

・The obtained token is placed in a path that the application can read.

Vault Token expired and caused a problem

・To renew the AppRole's secret_id, it is set as a custom secret_id

・The token used to set the custom secret_id is issued via auth/token

・Each mounted secrets engine has its own max-lease-ttl setting, and there is also a system-wide max TTL

・If neither is set, the upper limit is the system default max TTL of 32 days

・When that token expired, 403 errors appeared repeatedly in audit.log

・Monitoring of audit.log was added with Consul checks on the Vault server

・Detected errors are sent to Slack with Consul Alert

Impressions

・It's amazing that they developed it independently without using k8s or Docker.

・Many companies don't use Terraform's Provisioner and instead use other configuration management tools.

・Declaring a backend and keeping tfstate in S3 is the stable approach.

・I don't usually write lifecycle blocks, so I thought I needed to learn them.

・I'm in charge of multi-hop SSH through a jump server, so I'd like to try Consul.

・To be honest, I don't really understand Vault... I haven't studied it enough, so I'm relearning it...

■ Terraform/Packer support Applibot's DevOps

System Operations Engineer, Planning and Development Division, Applibot Inc. (CyberAgent Group)

・Teams are organized per application

・There are only two SYSOPs (people who prepare the server environment)!

・SYSOPs often work with server engineers on the application teams

・A story about how past issues were solved using Terraform/Packer

Case 1: Image creation

・Uses Rundeck/Ansible/Packer

・Creates machine images in conjunction with Ansible

・Advantages: The image creation process can be templated

・Before

・Launching EC2 from an AMI, applying configuration changes with Ansible, and deleting the server after imaging were all performed manually.

・After

・Just run the packer build command

Case 2: Building a load testing environment

・Uses Terraform/Hubot

・SYSOPs build, reconfigure, and review the load testing environment

・Infrastructure preparations

・Ultimately, an environment equivalent to production is needed

・Created at the time of testing

・Maintaining it at the same scale as production is costly, so it is created for each test

・Kept small when not in use, even during the test period

Servers that generate the load

・JMeter, on a separate network and account

・Master-slave configuration; slaves are scaled with Auto Scaling

・Created for each test, deleted when not in use

・Various monitoring

・Grafana/Prometheus/Kibana+Elasticsearch

・Before

・awscli was run against the managed instances

・The startup order was adjusted with a script

・The scripts became "secret sauce" that only insiders understood

・SYSOPs scaled the environment out and in every morning and night

・After

・All components are converted to terraform

・Existing resources are handled with terraform import

・If there are inconsistencies, tfstate is edited directly

・Startup management can be consolidated

・The configuration can be understood by looking at the terraform code

・Both creation and scaling can be performed with terraform apply

・The order of configuration is specified with depends_on

・Infrastructure scaling is done by switching vars

・Everything can now be completed with just Slack notifications

Case 3: Building a new environment

・Uses Terraform/GitLab

・Before

・Sealing the root account

・Configuring Consolidated Billing

・Creating IAM users and groups, and configuring switch roles

・Creating S3 buckets for CloudTrail and logs

・Network settings, opening ports for monitoring

・After

・You can now manage the settings of the account itself

・Seal the root account

・Configure Consolidated Billing

・Create an IAM user for terraform

・Just run terraform

・Configuration changes can also be managed with merge requests

・It is important to create templates for routine tasks!!

Impressions

・Creating machine images with Packer + Ansible is really convenient.

・While Packer builds the image, the commands that configure it can be managed as Ansible code.

・Besides Ansible, Packer can also run shell scripts.

・Our company also uses Terraform to build load testing environments, so this was helpful.

・We haven't used chat notifications via Hubot, so we'd like to try that.

・All the processes required to build a new environment seem to have been modularized, which was very helpful.

■ tfnotify - Show Terraform execution plan beautifully on GitHub

b4b4r07, Software Engineer at Mercari, Inc

What is tfnotify?

・A CLI tool written in Go

・Parses the results of Terraform execution and notifies any destination

・Used by piping terraform apply | tfnotify apply

Why is it necessary?

・Terraform is used in the microservices area.

・From an ownership perspective, reviews and merges should be handled by each microservice team, but there are cases where the platform team also wants to review.

・The platform/microservices team understands the importance of IaC and wants to get into the habit of coding in Terraform and checking the execution plan every time.

・They want to avoid the hassle of having to go to the CI screen (they want to be able to check it quickly on GitHub).

HashiCorp tools at Mercari

・There are over 70 microservices

・All microservices and their platform infrastructure are built using Terraform

・Developers are also encouraged to practice IaC

・There are 140 tfstates

・All Terraform code is managed in one central repository

Repository Configuration

・Separate directories for each MicroService

・Separate tfstate for each Service

・Separate files for each Resource

・Delegate authority with CODEOWNERS

Delegation of authority

・GitHub features

・Merges cannot be made until approved by the people listed in CODEOWNERS

・Changes cannot be made without permission
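GitHub evaluates CODEOWNERS rules top to bottom and the last matching pattern wins, which is what lets a catch-all platform rule coexist with per-service directory rules. The Go sketch below mimics that lookup for a simplified subset only: `*` and root-anchored directory patterns ending in `/` (real CODEOWNERS uses gitignore-style patterns). The team names are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// rule is one CODEOWNERS line: a path pattern followed by its owners.
type rule struct {
	pattern string
	owners  []string
}

// parseCodeowners reads "pattern owner..." lines, skipping blanks and comments.
func parseCodeowners(text string) []rule {
	var rules []rule
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) == 0 || strings.HasPrefix(fields[0], "#") {
			continue
		}
		rules = append(rules, rule{pattern: fields[0], owners: fields[1:]})
	}
	return rules
}

// matches supports only "*" and directory patterns such as "/microservices/payment/".
func (r rule) matches(path string) bool {
	if r.pattern == "*" {
		return true
	}
	return strings.HasSuffix(r.pattern, "/") &&
		strings.HasPrefix("/"+strings.TrimPrefix(path, "/"), r.pattern)
}

// ownersFor returns the owners of path; as on GitHub, the last matching rule wins.
func ownersFor(rules []rule, path string) []string {
	var owners []string
	for _, r := range rules {
		if r.matches(path) {
			owners = r.owners
		}
	}
	return owners
}

func main() {
	rules := parseCodeowners(`
# hypothetical teams
*                       @example/platform
/microservices/payment/ @example/payment @example/platform
`)
	fmt.Println(ownersFor(rules, "microservices/payment/main.tf")) // [@example/payment @example/platform]
	fmt.Println(ownersFor(rules, "microservices/search/main.tf"))  // [@example/platform]
}
```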

Implementing tfnotify

・Uses io.TeeReader

・Terraform execution results are structured using a custom parser

・Post messages can be written in Go templates

・Configuration is stored in YAML

Impressions

- I was managing Terraform in a central repository and was wondering how to manage code with Git, so this was helpful.

- It would be even better if tfnotify supported GitLab CI and Chatwork.

- It's important for developers to practice IaC, and it's important to carefully check Terraform execution plans every time.

- I'd like to try GitHub's CODEOWNERS feature (I want to migrate to GitHub first...)

■ About dwango's use of HashiCorp OSS

Eigo Suzuki, Dwango Co., Ltd., Second Service Development Division, Dwango Cloud Service Department, Consulting Section, Second Product Development Department, First Section

dwango's infrastructure

・VMware vSphere, AWS, bare metal, some Azure/OpenStack, etc.

HashiCorp Tools at dwango

・Packer: used to create Vagrant boxes

・Also used to create AMIs as AWS environments increased

・Consul: used for service discovery

・Rewrites application configuration files when the MySQL server goes down

・Used as a KVS and for health checks

・Used as a dynamic inventory for Ansible playbooks

・Terraform: used for configuration management of AWS environments

・Used by Niconico Surveys, friends.nico, Niconare, and part of N Preparatory School
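Using Consul as an Ansible dynamic inventory means turning Consul's catalog into the JSON Ansible expects from an inventory script's `--list` call: one group per service with a `hosts` list, plus an empty `_meta.hostvars` so Ansible doesn't query each host separately. The Go sketch below does that with Consul's `/v1/catalog/service/<name>` endpoint. The service names are hypothetical, and `main` uses a fake Consul built with `httptest` so it runs without an agent.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
)

// catalogEntry holds the fields we need from /v1/catalog/service/<name>.
type catalogEntry struct {
	Node           string
	Address        string
	ServiceAddress string
}

// inventory builds Ansible "--list" output with one group per Consul service.
func inventory(client *http.Client, consulAddr string, services []string) (map[string]interface{}, error) {
	inv := map[string]interface{}{
		"_meta": map[string]interface{}{"hostvars": map[string]interface{}{}},
	}
	for _, svc := range services {
		resp, err := client.Get(consulAddr + "/v1/catalog/service/" + svc)
		if err != nil {
			return nil, err
		}
		var entries []catalogEntry
		if resp.StatusCode != http.StatusOK {
			err = fmt.Errorf("consul returned %s for %s", resp.Status, svc)
		} else {
			err = json.NewDecoder(resp.Body).Decode(&entries)
		}
		resp.Body.Close()
		if err != nil {
			return nil, err
		}
		hosts := []string{}
		for _, e := range entries {
			addr := e.ServiceAddress
			if addr == "" {
				addr = e.Address // an empty ServiceAddress means the node address is used
			}
			hosts = append(hosts, addr)
		}
		inv[svc] = map[string]interface{}{"hosts": hosts}
	}
	return inv, nil
}

// fakeConsul serves canned catalog responses for the demo and tests.
func fakeConsul() *httptest.Server {
	data := map[string]string{
		"/v1/catalog/service/mysql": `[{"Node":"db1","Address":"10.0.0.11","ServiceAddress":""}]`,
		"/v1/catalog/service/web":   `[{"Node":"web1","Address":"10.0.0.21","ServiceAddress":"10.0.1.21"}]`,
	}
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		body, ok := data[r.URL.Path]
		if !ok {
			http.NotFound(w, r)
			return
		}
		fmt.Fprint(w, body)
	}))
}

func main() {
	srv := fakeConsul()
	defer srv.Close()
	inv, err := inventory(srv.Client(), srv.URL, []string{"mysql", "web"})
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		return
	}
	enc := json.NewEncoder(os.Stdout)
	enc.SetIndent("", "  ")
	_ = enc.Encode(inv)
}
```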

CI with Terraform (Tools Used)

・GitHub

・Jenkins

・Terraform

・tfenv

CI (flow) with Terraform

1. A PR is issued.

2. In Jenkins, plan -> apply -> destroy the pr_test environment.

3. If 2 passes, plan -> apply to the sandbox environment.

4. If all passes, merge.

The good things about CI with Terraform

・You can see on GitHub whether the apply succeeded or failed.

・The environments are split into dev/prod, so you can confirm in advance that the apply can be performed safely.

・With tfenv, upgrading Terraform itself is easy to verify.

CD with Terraform

・Once CI passes, we want to automatically deploy to dev, etc.

・We've created a job in Jenkins to ensure safety

・Deployments can now be done without any issues, regardless of who is working on it

・Results are notified via Slack, so it's easy to check

Impressions

・Packer is definitely the best for creating AMIs.

・We use Ansible at our company, so we want to use Consul as a dynamic inventory.

・Switching tfenv versions is convenient.

・Even when plan succeeds, terraform apply can still fail, so I thought deploying to a pr_test environment first was a good approach.

・For CD, Jenkins is the best. It's easier and more reliable to create a job than to execute commands directly.

That's my summary, mostly in bullet points.

We would like to actively use HashiCorp tools to realize IaC at our company as well!
