[Introduction to Scraping] Obtaining table data on a website using Python


Hello, nice to meet you.

I'm Kawai from the System Solutions Department and I've been really into Kirby Discovery lately.

It's already hot, but spring is here! On my commute, I've started seeing people who look like new employees (I was one myself, a long time ago).

This time, I'll write about scraping with Python, which new employees may find a little helpful in their work.

What is scraping?

Scraping is a technique for automatically extracting specific data from websites. As data analysis has attracted attention in recent years, scraping has become one of its fundamental techniques for collecting data.

The word scrape originally meant "to scrape together," and the term seems to have been derived from this.

In this article, we will use the Python programming language to automatically retrieve the contents of a table on a web page.

Preparation

If Python is not installed, download and install the package for your operating system from https://www.python.org/downloads/.

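Next, install the library used in this article, Beautiful Soup 4 (package name: beautifulsoup4). The "html.parser" parser it uses is part of Python's standard library, so it does not need to be installed.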

This article assumes a Windows environment. Press [Windows] + [R], enter [cmd], and press [Enter] to open the Command Prompt, then run the following command to start the installation.

pip install beautifulsoup4
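To confirm that the installation worked, a short check like the one below can be saved as a .py file and run. It is a minimal sketch that assumes only the beautifulsoup4 package installed above; because html.parser comes with Python, parsing with it needs nothing else.

```python
import bs4
from bs4 import BeautifulSoup

# The package installed with pip exposes its version string
print("Beautiful Soup version:", bs4.__version__)

# "html.parser" is Python's built-in HTML parser, so no extra install is needed
soup = BeautifulSoup("<table><tr><td>ok</td></tr></table>", "html.parser")
print(soup.td.get_text())  # ok
```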

This time, let's automatically retrieve information such as the domains and IP addresses used by Office 365 from the following Microsoft page and write it to a CSV file (collecting this information by hand is quite a hassle).

"Office 365 URLs and IP Address Ranges" > "Microsoft 365 Common and Office Online"

https://docs.microsoft.com/ja-jp/microsoft-365/enterprise/urls-and-ip-address-ranges?view=o365-worldwide

Operating environment and full code

Operating system: Microsoft Windows 10 Pro

Python version: 3.10

```python
import csv
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# Identify the request as Firefox; the header is only sent when passed through Request
headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:61.0) Gecko/20100101 Firefox/61.0"}
request = Request("https://docs.microsoft.com/ja-jp/microsoft-365/enterprise/urls-and-ip-address-ranges?view=o365-worldwide", headers=headers)
html = urlopen(request)
bsObj = BeautifulSoup(html, "html.parser")

# Index 4 is the fifth <table> on the page (indexes start at 0)
table = bsObj.find_all("table")[4]
rows = table.find_all("tr")

# cp932 is the Japanese Windows encoding; newline="" lets the csv module control line endings
with open(r"C:\Users\python\Desktop\python\2022\microsoft.csv", "w", encoding="cp932", newline="") as file:
    writer = csv.writer(file)
    for row in rows:
        csvRow = []
        for cell in row.find_all(["td", "th"]):
            csvRow.append(cell.get_text())
        writer.writerow(csvRow)
```
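If you want to re-run the parsing step without downloading Microsoft's page each time, one option is to split the script into small functions and test them on sample HTML. This is a sketch of such a refactoring, not the original code; the function names fetch_html, table_rows, and save_csv and the 30-second timeout are hypothetical choices.

```python
import csv
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# Hypothetical helpers: a sketch of the same script split into testable steps
USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64; rv:61.0) Gecko/20100101 Firefox/61.0"


def fetch_html(url, timeout=30):
    """Download a page as bytes, sending a browser-like User-Agent (needs network access)."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=timeout) as response:
        return response.read()


def table_rows(html, table_index):
    """Return the text of each th/td cell, row by row, for the table at table_index."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find_all("table")[table_index]
    return [[cell.get_text() for cell in tr.find_all(["td", "th"])]
            for tr in table.find_all("tr")]


def save_csv(rows, path, encoding="cp932"):
    """Write a list of rows to a CSV file."""
    with open(path, "w", encoding=encoding, newline="") as file:
        csv.writer(file).writerows(rows)


# Offline check with made-up HTML: index 1 selects the second table
sample = ("<table><tr><th>Product</th></tr></table>"
          "<table><tr><th>Port</th></tr><tr><td>443</td></tr></table>")
print(table_rows(sample, 1))  # [['Port'], ['443']]
```

With the real page, the pieces would be combined as save_csv(table_rows(fetch_html(url), 4), path), using the URL and output path from the code above.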

Code explanation

```python
import csv
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup
```

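Import each library. BeautifulSoup selects Python's built-in parser by name (the string "html.parser"), so that module does not need to be imported.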

```python
headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:61.0) Gecko/20100101 Firefox/61.0"}
request = Request("https://docs.microsoft.com/ja-jp/microsoft-365/enterprise/urls-and-ip-address-ranges?view=o365-worldwide", headers=headers)
html = urlopen(request)
bsObj = BeautifulSoup(html, "html.parser")
```

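→ Set the user agent information (here, Firefox's) in headers. urlopen only sends it when the dictionary is wrapped in a Request object, as in urlopen(Request(url, headers=headers)); a headers variable that is defined but never passed has no effect.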

Open the target page with urlopen and pass the response to BeautifulSoup so that it can parse the HTML with html.parser.

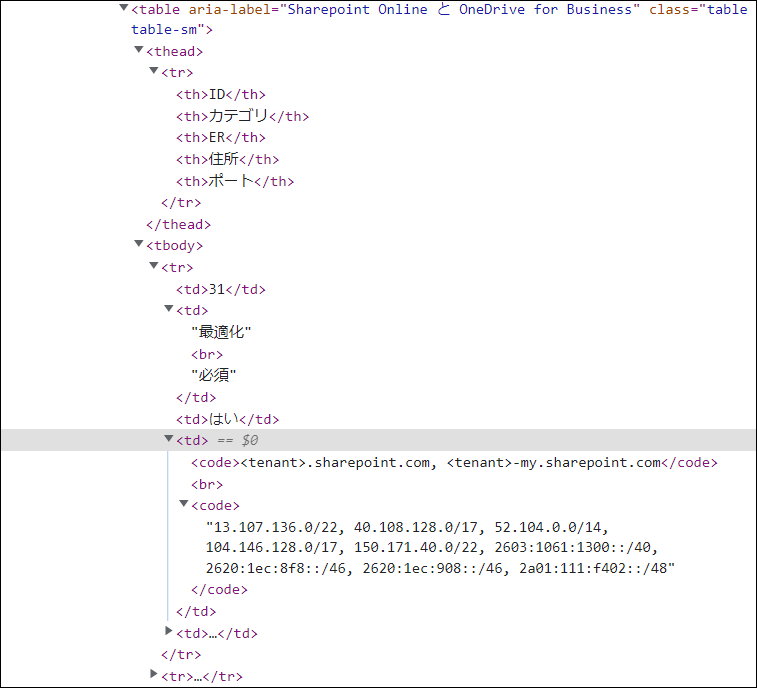
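The following sketch shows the difference between passing the headers and not passing them, without sending anything over the network. It uses only the standard urllib.request module; the fetch helper and its 30-second timeout are hypothetical additions.

```python
from urllib.request import Request, urlopen

URL = "https://docs.microsoft.com/ja-jp/microsoft-365/enterprise/urls-and-ip-address-ranges?view=o365-worldwide"
headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:61.0) Gecko/20100101 Firefox/61.0"}

# Headers attached to a Request are sent by urlopen
request = Request(URL, headers=headers)
# urllib normalizes header names with str.capitalize(), so the key becomes "User-agent"
print(request.has_header("User-agent"))  # True
print(request.get_header("User-agent"))

# A bare Request carries no User-Agent; urlopen then falls back to urllib's
# default ("Python-urllib/" followed by the Python version)
plain_request = Request(URL)
print(plain_request.has_header("User-agent"))  # False


def fetch(req, timeout=30):
    """Hypothetical helper: download the page body as bytes (needs network access)."""
    with urlopen(req, timeout=timeout) as response:
        return response.read()
```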

```python
table = bsObj.find_all("table")[4]
rows = table.find_all("tr")
```

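→ Check the HTML structure with your browser's developer tools to find the table you want. Indexes start at 0, so [4] selects the fifth table element on the page. Then use find_all (also available under its older name findAll) to collect every tr (row) tag in that table.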
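A hard-coded index such as [4] silently selects the wrong table if the page gains or loses a table. As a hedged alternative, you can first list the tables with their first rows, or pick a table by the text of a header cell. The helpers below are hypothetical and run against made-up HTML; which header text identifies the table you want on Microsoft's page is something to check in the developer tools.

```python
from bs4 import BeautifulSoup


def list_tables(soup):
    """Print each table's index together with the text of its first row."""
    for index, table in enumerate(soup.find_all("table")):
        first_row = table.find("tr")
        label = first_row.get_text(" | ", strip=True) if first_row else "(no rows)"
        print(index, label)


def find_table_by_header(soup, header_text):
    """Return the first table containing a <th> whose text equals header_text, else None."""
    for table in soup.find_all("table"):
        if any(th.get_text(strip=True) == header_text for th in table.find_all("th")):
            return table
    return None


# Made-up sample page with two tables
sample = ("<table><tr><th>Product</th></tr></table>"
          "<table><tr><th>ID</th><th>Port</th></tr><tr><td>1</td><td>443</td></tr></table>")
soup = BeautifulSoup(sample, "html.parser")
list_tables(soup)
# 0 Product
# 1 ID | Port
table = find_table_by_header(soup, "Port")
```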

```python
with open(r"C:\Users\python\Desktop\python\2022\microsoft.csv", "w", encoding="cp932", newline="") as file:
    writer = csv.writer(file)
    for row in rows:
        csvRow = []
        # Collect the text of every header (th) and data (td) cell in this row
        for cell in row.find_all(["td", "th"]):
            csvRow.append(cell.get_text())
        writer.writerow(csvRow)
```

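→ Open the output file with the desired path and file name, specifying the character encoding (cp932, the Japanese Windows encoding, so the file opens correctly in Excel on Japanese Windows). If the file does not exist, it is created at the specified path, but the folder itself must already exist.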
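The original code raises UnicodeEncodeError if a cell contains a character cp932 cannot represent, and open() fails with FileNotFoundError if the folder in the path does not exist. A minimal sketch that guards against both is shown below; the helper name write_csv and the use of errors="replace" (which silently turns such characters into "?") are assumptions of this sketch, not part of the original script.

```python
import csv
import os
import tempfile


def write_csv(path, rows, encoding="cp932"):
    """Hypothetical helper: write rows to CSV, creating the parent folder if it is missing."""
    folder = os.path.dirname(path)
    if folder:
        # open(..., "w") creates the file but not missing folders
        os.makedirs(folder, exist_ok=True)
    # errors="replace" writes "?" for characters the encoding cannot represent
    # (for example emoji in cp932) instead of raising UnicodeEncodeError
    with open(path, "w", encoding=encoding, errors="replace", newline="") as file:
        csv.writer(file).writerows(rows)


# Usage with made-up rows, written to a temporary folder instead of the article's path
out_path = os.path.join(tempfile.mkdtemp(), "2022", "microsoft.csv")
write_csv(out_path, [["ID", "Note"], ["1", "sample 🙂"]])
with open(out_path, encoding="cp932", newline="") as file:
    rows_back = list(csv.reader(file))
print(rows_back)  # [['ID', 'Note'], ['1', 'sample ?']]
```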

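"w" opens the file for writing, overwriting any existing content. newline="" is what the csv module documentation recommends: the writer adds its own line ending ("\r\n") after each row, and without newline="", Windows text-mode translation turns each row ending into "\r\r\n", which shows up as a blank line between rows.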
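To see why newline="" matters, the sketch below writes the same made-up rows through an in-memory text stream twice: once with newline="" and once with newline="\r\n", which mimics the line-ending translation of a normal text-mode file on Windows. The helper name csv_bytes is hypothetical.

```python
import csv
import io

# Made-up sample rows
rows = [["Domain", "Port"], ["*.example.com", "443"]]


def csv_bytes(newline):
    """Write rows through a text stream with the given newline setting and return the raw bytes."""
    raw = io.BytesIO()
    # newline="\r\n" translates every "\n" written into "\r\n", like a Windows text file
    text = io.TextIOWrapper(raw, encoding="cp932", newline=newline)
    csv.writer(text).writerows(rows)
    text.flush()
    return raw.getvalue()


print(csv_bytes(""))      # b'Domain,Port\r\n*.example.com,443\r\n'
print(csv_bytes("\r\n"))  # b'Domain,Port\r\r\n*.example.com,443\r\r\n'
```

The doubled "\r" in the second result is what Excel and other tools display as an empty line between rows.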

For each row (tr tag) found above, the loop searches for its td and th cells, collects the text of each cell with get_text(), and writes the resulting list to the CSV file as one row.

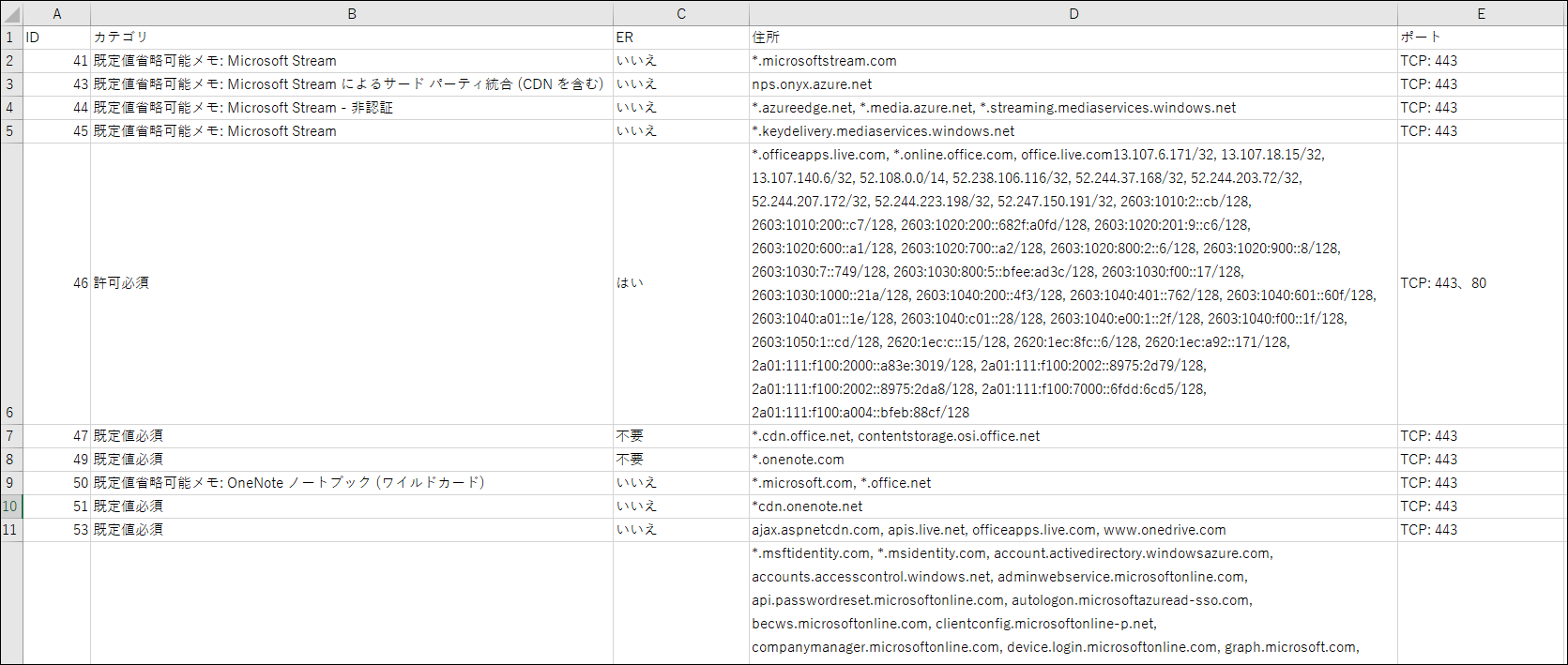
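If a cell holds several values separated by br tags (whether that happens in the target table is an assumption to check on the page), plain get_text() runs them together. The sketch below runs the same row loop, written as a list comprehension, on made-up HTML and compares get_text() with get_text(" ", strip=True), which puts a space between separate text pieces and trims surrounding whitespace.

```python
from bs4 import BeautifulSoup

# Made-up table: one cell has padding spaces, another has two values split by <br/>
html = """
<table>
  <tr><th>ID</th><th>Addresses</th></tr>
  <tr>
    <td> 1 </td>
    <td>a.example.com<br/>b.example.com</td>
  </tr>
</table>
"""
table = BeautifulSoup(html, "html.parser").find("table")

# Same loop as the article, written as a list comprehension
plain = [[cell.get_text() for cell in tr.find_all(["td", "th"])]
         for tr in table.find_all("tr")]
# separator=" " keeps <br>-separated values apart; strip=True trims whitespace
clean = [[cell.get_text(" ", strip=True) for cell in tr.find_all(["td", "th"])]
         for tr in table.find_all("tr")]

print(plain)  # [['ID', 'Addresses'], [' 1 ', 'a.example.comb.example.com']]
print(clean)  # [['ID', 'Addresses'], ['1', 'a.example.com b.example.com']]
```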

Output results

This is the result. This time I only retrieved a small amount of information, but the more data you need to collect, the more time this approach saves.

If I have another opportunity, I would like to write another article that will be useful to someone.
