About the MySQL-compatible distributed SQL database "TiDB" [OSS]

This is Ohara from the Technical Sales Department

In this post I introduce "TiDB", an open source (OSS) distributed SQL database

TiDB Overview

■ TiDB is open source software developed by PingCAP. Its storage layer, TiKV, is hosted by the CNCF

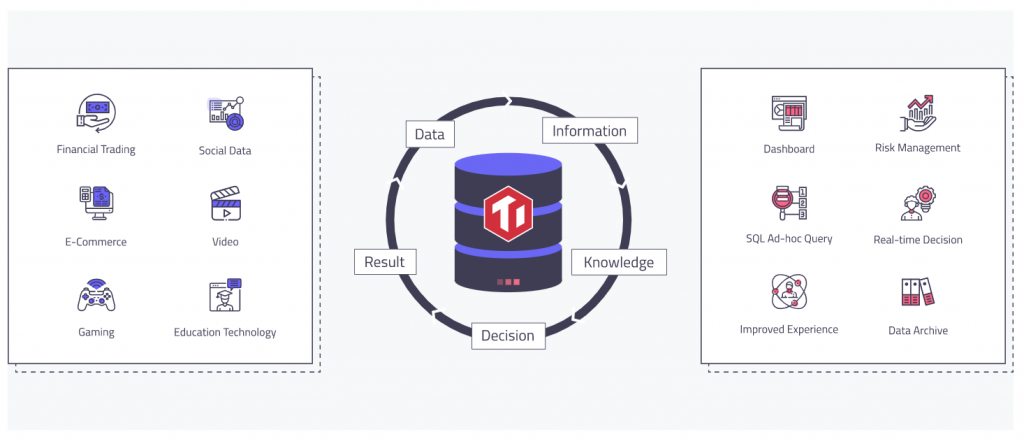

■ TiDB is an open-source NewSQL database that supports HTAP (Hybrid Transactional and Analytical Processing) workloads

■ It is compatible with MySQL and features horizontal scalability, strong consistency, and high availability. With HTAP it covers both OLTP (online transaction processing) and OLAP (online analytical processing), so it suits use cases that need high availability and strong consistency over large-scale data

■ As an example of its adoption by a Japanese company, it is also used in the infrastructure of PayPay, a company that provides QR payment services

◇ Quote: Payment platform engineer supporting transactions

TiDB Features

Horizontally distributed scale-out/scale-in

The TiDB architecture design separates compute from storage, allowing compute and storage capacity to be independently scaled out and in online as needed

Multi-replica and high availability

- Data is stored in multiple replicas, and the replicas use the Multi-Raft protocol to replicate the transaction log

Because transactions only commit if the data has been successfully written to a majority of replicas, this provides strong consistency and availability guarantees in the event that a minority of replicas go down

You can configure the geographic location and number of replicas as needed to meet the requirements of different disaster tolerance levels
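
The majority rule described above can be written down as a few lines of arithmetic. The following is a minimal sketch, not TiKV's actual implementation; the function names `majority`, `can_commit`, and `tolerated_failures` are made up for illustration.

```python
def majority(replica_count: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replica_count // 2 + 1


def can_commit(acknowledged: int, replica_count: int) -> bool:
    """A write commits only once a majority of replicas have persisted it."""
    return acknowledged >= majority(replica_count)


def tolerated_failures(replica_count: int) -> int:
    """Replicas that can be down while a majority is still reachable."""
    return replica_count - majority(replica_count)


if __name__ == "__main__":
    for n in (3, 5):
        print(n, "replicas: majority", majority(n),
              "tolerates", tolerated_failures(n), "failure(s)")
```

With three replicas, two acknowledgements are enough to commit and one replica can be lost. Moving to five replicas raises the tolerated failures to two, which is one reason the replica count is configurable.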

Real-time HTAP

TiDB provides two storage engines: TiKV, a row-based storage engine, and TiFlash, a columnar storage engine

TiFlash uses the Multi-Raft Learner protocol to replicate data from TiKV in real time and ensure data consistency between the TiKV row-based storage engine and the TiFlash columnar storage engine

TiKV and TiFlash can be deployed on different machines as needed to solve HTAP resource isolation issues

Cloud-native distributed database

TiDB is a distributed database designed for the cloud. It provides flexible scalability, reliability, and security on cloud platforms, so users can scale TiDB elastically as their workload requirements change

TiDB has at least three replicas of each data set, which can be scheduled in different cloud availability zones to tolerate an entire data center outage

TiDB Operator helps you manage TiDB on Kubernetes and automates the tasks associated with operating a TiDB cluster, making it easy to deploy TiDB on clouds that offer managed Kubernetes.

TiDB Cloud is the fully managed TiDB service, allowing you to deploy and run a TiDB cluster in the cloud with just a few clicks.

*TiDB Cloud is a paid managed service offered on cloud platforms such as AWS, Azure, and GCP.

Compatible with the MySQL 5.7 protocol and the MySQL ecosystem

TiDB is compatible with the MySQL 5.7 protocol, common MySQL features, and the MySQL ecosystem, so migrating an existing application to TiDB usually requires only minor code changes

TiDB also has data migration tools
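
Because TiDB speaks the MySQL protocol, an application can normally keep its existing MySQL driver and change only the connection settings. The sketch below builds such settings; the helper name `tidb_connect_kwargs`, the host name, the user, and the database name are placeholders for illustration. Port 4000 is TiDB's default SQL port.

```python
def tidb_connect_kwargs(host, port=4000, user="root", password="", database="test"):
    """Connection settings in the keyword form most Python MySQL drivers accept.

    Only the host and port differ from a typical MySQL setup (MySQL defaults
    to 3306, TiDB to 4000). All other values here are placeholders.
    """
    return {
        "host": host,
        "port": port,
        "user": user,
        "password": password,
        "database": database,
    }


if __name__ == "__main__":
    # "tidb.example.internal" is a hypothetical host name.
    print(tidb_connect_kwargs("tidb.example.internal"))
```

These settings could then be handed to a MySQL driver that is already in use, for example `pymysql.connect(**kwargs)` with PyMySQL, assuming that driver is installed.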

TiDB Architecture

◇ Quote: TiDB Architecture

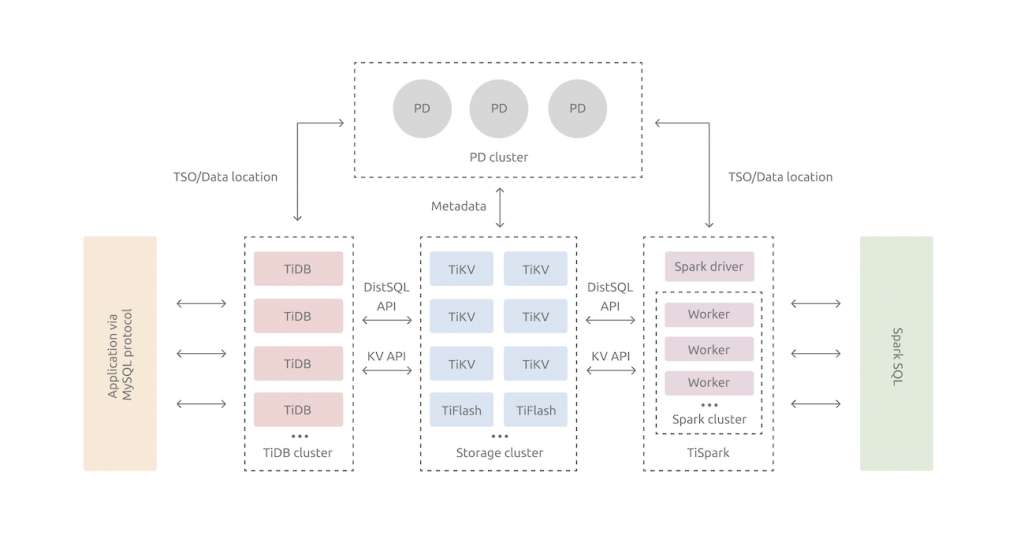

As a distributed database, TiDB is designed to consist of multiple components that communicate with each other to form the complete TiDB system

TiDB Server

The TiDB server is a stateless SQL layer that exposes a MySQL protocol connection endpoint to the outside world. The TiDB server receives SQL requests, parses and optimizes the SQL, and finally generates a distributed execution plan

It is horizontally scalable and provides a unified interface to the outside world through load balancing components such as Linux Virtual Server (LVS), HAProxy, and F5. It does not store data and is dedicated to computing and SQL parsing, sending the actual data read requests to TiKV nodes (or TiFlash nodes)
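
Since the TiDB server holds no data, any instance can serve any connection, which is what makes simple load balancing in front of it possible. The following is a toy round-robin sketch of that idea, not how LVS, HAProxy, or F5 work internally; the class name `RoundRobinBalancer` and the `tidb-N:4000` addresses are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backend addresses in turn. Any backend can serve any
    request because the SQL layer keeps no data of its own."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._cycle = itertools.cycle(self._backends)

    def next_backend(self):
        return next(self._cycle)

    def add_backend(self, address):
        """Scale out: restart the rotation with the new server included."""
        self._backends.append(address)
        self._cycle = itertools.cycle(self._backends)


if __name__ == "__main__":
    lb = RoundRobinBalancer(["tidb-0:4000", "tidb-1:4000"])
    print([lb.next_backend() for _ in range(3)])
    lb.add_backend("tidb-2:4000")
    print([lb.next_backend() for _ in range(3)])
```

Adding a server only changes the balancer's list; no data has to move, because the data lives in the storage layer.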

PD (Placement Driver) Server

The PD Server is a component consisting of at least three nodes that is responsible for managing metadata for the entire cluster

It stores the real-time data distribution metadata of every single TiKV node and the topology structure of the entire TiDB cluster, provides the TiDB dashboard management UI, and assigns transaction IDs to distributed transactions

The PD server not only stores cluster metadata, but also sends data scheduling commands to specific TiKV nodes according to the data distribution state reported by the TiKV nodes in real time
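
To give a feel for what "scheduling according to the reported distribution" means, here is a deliberately simplified sketch that evens out the number of regions per store. It is an assumption-laden toy, not PD's real scheduler, which weighs many more factors; the function name `plan_moves` and the store names are made up.

```python
def plan_moves(region_counts):
    """Return (source, target) moves that even out region counts across stores.

    region_counts maps a store name to the number of regions it reports.
    """
    counts = dict(region_counts)
    moves = []
    if not counts:
        return moves
    while True:
        busiest = max(counts, key=counts.get)
        idlest = min(counts, key=counts.get)
        # Stop once moving one more region would no longer reduce the gap.
        if counts[busiest] - counts[idlest] <= 1:
            return moves
        counts[busiest] -= 1
        counts[idlest] += 1
        moves.append((busiest, idlest))


if __name__ == "__main__":
    reported = {"tikv-0": 4, "tikv-1": 1, "tikv-2": 1}
    for source, target in plan_moves(reported):
        print("move one region from", source, "to", target)
```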

Storage Server

◇ TiKV Server

- TiKV is a distributed transactional key-value storage engine, and the TiKV server is responsible for storing data

The basic unit of data storage is the region. Each region stores data for a specific key range, which is a left-closed, right-open interval from StartKey to EndKey, and each TiKV node holds multiple regions. The TiKV API provides native support for distributed transactions at the key-value pair level and provides snapshot isolation by default
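
The left-closed, right-open key range can be made concrete with a small lookup sketch. This is an illustration only, not TiKV code: the class name `RegionMap`, the region IDs, and the sample keys are hypothetical, and the sketch treats an empty end key as "no upper bound".

```python
import bisect


class RegionMap:
    """Maps a key to the region whose [start_key, end_key) range contains it."""

    def __init__(self, regions):
        # regions: iterable of (start_key, end_key, region_id).
        # In this sketch an end_key of b"" means "no upper bound".
        self._regions = sorted(regions, key=lambda r: r[0])
        self._starts = [r[0] for r in self._regions]

    def locate(self, key):
        # Find the rightmost region whose start_key <= key.
        i = bisect.bisect_right(self._starts, key) - 1
        if i < 0:
            return None
        start, end, region_id = self._regions[i]
        # Left-closed, right-open: start_key is included, end_key is not.
        if end == b"" or key < end:
            return region_id
        return None


if __name__ == "__main__":
    regions = RegionMap([(b"", b"g", 1), (b"g", b"p", 2), (b"p", b"", 3)])
    for key in (b"a", b"g", b"o", b"p"):
        print(key, "-> region", regions.locate(key))
```

The key `b"g"` belongs to region 2 rather than region 1, because the end key of a range is excluded and the start key is included. This is what lets adjacent regions share a boundary without overlapping.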

After processing the SQL statement, the TiDB server translates the SQL execution plan into actual calls to the TiKV API. Data is stored in TiKV, and all data in TiKV is automatically maintained across multiple replicas (three by default), making TiKV natively highly available and supporting automatic failover

◇ TiFlash Server

TiFlash servers are a special type of storage server. Unlike regular TiKV nodes, TiFlash stores data column-wise and is primarily designed to accelerate analytical processing
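
The difference between row-wise and column-wise storage can be shown with plain Python data structures. This is only a conceptual sketch with made-up sample data; it does not reflect the on-disk formats of TiKV or TiFlash.

```python
# Row layout: each record is kept together (the TiKV-style view of a table).
rows = [
    {"order_id": 1, "customer": "alice", "amount": 120},
    {"order_id": 2, "customer": "bob", "amount": 80},
    {"order_id": 3, "customer": "alice", "amount": 50},
]


def to_columns(row_records):
    """Pivot row records into one list per column (the TiFlash-style view)."""
    columns = {}
    for record in row_records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# Point lookup (OLTP style): the row layout returns a whole record at once.
order_2 = next(r for r in rows if r["order_id"] == 2)

# Aggregate (OLAP style): the column layout reads only the "amount" values.
total_amount = sum(columns["amount"])

if __name__ == "__main__":
    print(order_2)
    print(total_amount)
```

An aggregate over one column touches a single contiguous list in the column layout, whereas the row layout would have to walk every record. That is the intuition behind using a columnar engine for analytical queries.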

Summary

TiDB is a highly available, open source (OSS), horizontally distributed, MySQL-compatible database, so it may be worth considering as the database for web services such as social games and e-commerce sites
