Best value for money? Benchmarking AMD instances on GCP


Hello everyone.

My name is Kanbara and I work in the System Solutions Department.

I joined Beyond as a new graduate, and before I knew it, three years had passed without my writing a single blog post.

This is my first blog post.

This may come as a surprise, but I've been personally enthusiastic about AMD CPUs for the past few years.

The first Zen architecture CPU was released in 2017, and its high multi-threaded performance became a hot topic.

Since then, CPUs with improved architectures have been released one after another, such as Zen+ and Zen 2. In particular, the Zen 3 architecture CPUs announced in October 2020 significantly improved single-threaded performance as well as multi-threaded performance, making them extremely powerful in many ways.

I was also keen to use an AMD CPU for my next PC build, but due to various circumstances I haven't been able to build one yet. I want a 5800X.

Now, CPUs that use AMD's Zen microarchitecture are also being developed for servers.

Server products are sold under the brand name AMD EPYC, and our company's Ohara has summarized the features of EPYC in a blog post, so please take a look if you're interested.

Servers/instances that use EPYC CPUs are available on various cloud platforms, including AWS.

In this article, we will launch an EPYC Compute Engine instance on GCP and benchmark it to compare it with an instance that uses Intel Xeon.

AMD EPYC on GCP Compute Engine

Google Compute Engine offers EPYC across multiple machine types:

- E2 VM

- N2D VM

- Tau T2D VM

For the benchmark, we will use the N2D VM. N2D is the AMD EPYC counterpart of the N2 VM, which uses Intel Xeon, and I selected it because it makes CPU differences easier to compare.

Instance selection

First, select the instances for benchmarking.

The EPYC instance uses the N2D machine type, with the following specs and configuration. EPYC also has generations, and according to the official documentation, the second-generation EPYC Rome is available for N2D VMs. The latest EPYC is the third-generation Milan.

| Item | Value |
| --- | --- |
| CPU Brand | AMD EPYC Rome |
| vCPU | 2 |
| Memory | 8GB |
| Disk | Balanced persistent disk 20GB |
| OS Image | centos-7-v20211105 |

The competing Intel Xeon instance is an N2 VM with the following specs and configuration. Since a comparison would be meaningless if the specs differed, I made everything identical to the EPYC instance except for the CPU. The Xeon generation appears to be either Ice Lake or Cascade Lake; either way, it is a fairly recent generation.

| Item | Value |
| --- | --- |
| CPU Brand | Intel Xeon (Ice Lake or Cascade Lake) |
| vCPU | 2 |
| Memory | 8GB |
| Disk | Balanced persistent disk 20GB |
| OS Image | centos-7-v20211105 |

We will run the benchmarks on these two configurations and compare their performance.

Checking the CPU

Once the instances are up and running, log in via SSH.

GCP has SSH integrated into the console, so you can log in to the server straight from your browser. For disposable instances like these, this is convenient because you don't have to prepare keys and so on.

After logging in via SSH, I'll run lscpu. First, the EPYC instance:

    # lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                2
    On-line CPU(s) list:   0,1
    Thread(s) per core:    2
    Core(s) per socket:    1
    Socket(s):             1
    NUMA node(s):          1
    Vendor ID:             AuthenticAMD
    CPU family:            23
    Model:                 49
    Model name:            AMD EPYC 7B12
    Stepping:              0
    CPU MHz:               2249.998
    BogoMIPS:              4499.99
    Hypervisor vendor:     KVM
    Virtualization type:   full
    L1d cache:             32K
    L1i cache:             32K
    L2 cache:              512K
    L3 cache:              16384K
    NUMA node0 CPU(s):     0,1
    Flags:                 fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc art rep_good nopl nonstop_tsc extd_apicid eagerfpu pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext retpoline_amd ssbd ibrs ibpb stibp vmmcall fsgsbase tsc_adjust bmi1 avx2 smep bmi2 rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 clzero xsaveerptr arat npt nrip_save umip

The model name is AMD EPYC 7B12. You can also see the cache configuration.

The architecture is naturally x86_64, and the instruction set is compatible with Intel Xeon.

SIMD instructions are supported up to AVX2. However, since SIMD instructions themselves are only used in limited situations for general server applications, this may be sufficient.

Below is the result of running the same command on the Xeon instance:

    # lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                2
    On-line CPU(s) list:   0,1
    Thread(s) per core:    2
    Core(s) per socket:    1
    Socket(s):             1
    NUMA node(s):          1
    Vendor ID:             GenuineIntel
    CPU family:            6
    Model:                 85
    Model name:            Intel(R) Xeon(R) CPU @ 2.80GHz
    ...
    Flags:                 fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp ibrs_enhanced fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 arat avx512_vnni md_clear spec_ctrl intel_stibp arch_capabilities

The model name is Intel(R) Xeon(R) CPU @ 2.80GHz, but the specific model number appears to be masked.

Additionally, the Flags list contains avx512 entries (avx512f, avx512dq, and so on), indicating that the CPU supports AVX512 (512-bit SIMD instructions).

EPYC instances only support up to AVX2 (256-bit SIMD instructions), so the Xeon instance is likely to have the advantage in workloads that make heavy use of SIMD instructions, such as video encoding and image conversion.
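If you want to check the SIMD level programmatically rather than reading the Flags line by eye, something like the following minimal sketch could work. The helper name `widest_simd` and the level table are my own illustrative assumptions, and the flag strings are abbreviated from the lscpu output above.

```python
# Minimal sketch: report the widest SIMD extension found in a CPU flags string.
# `widest_simd` and SIMD_LEVELS are illustrative names, not part of any tool.

SIMD_LEVELS = [  # ordered from widest to narrowest
    ("avx512f", "AVX-512 (512-bit)"),
    ("avx2", "AVX2 (256-bit)"),
    ("avx", "AVX (256-bit)"),
    ("sse4_2", "SSE4.2 (128-bit)"),
    ("sse2", "SSE2 (128-bit)"),
]


def widest_simd(flags: str) -> str:
    """Return a label for the widest SIMD extension listed in `flags`."""
    present = set(flags.split())
    for flag, label in SIMD_LEVELS:
        if flag in present:
            return label
    return "none detected"


# Abbreviated flag lists copied from the two lscpu outputs.
epyc_flags = "fpu sse sse2 sse4_1 sse4_2 avx f16c avx2 bmi2 sha_ni"
xeon_flags = "fpu sse sse2 sse4_1 sse4_2 avx avx2 avx512f avx512dq avx512_vnni"

print("EPYC:", widest_simd(epyc_flags))
print("Xeon:", widest_simd(xeon_flags))
```

On a real server you could feed it the full Flags value from lscpu or the flags line of /proc/cpuinfo instead of the shortened strings used here.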

Preparing for the benchmark

This time, we will use UnixBench as the benchmark software.

A very easy-to-understand article explaining UnixBench and its installation procedure has already been published, so please refer to it for details.

Both the EPYC and Xeon instances were booted from the same image (centos-7-v20211105), and a yum -y update was run before running the benchmark.

The kernel, GCC, and UnixBench versions used in the execution environment are as follows:

| Item | Version |
| --- | --- |
| Linux Kernel | 3.10.0-1160.49.1.el7.x86_64 |
| GCC | 4.8.5 20150623 (Red Hat 4.8.5-44) |
| UnixBench | Version 5.1.3 |

When UnixBench is run without any arguments, it runs the benchmark once with a parallelism of 1 and then once more with a parallelism equal to the number of logical CPUs (two runs in total).

In this case, the instances are specified with 2 vCPUs, so the number of logical CPUs is 2, and the parallelism of the second run is therefore also 2.

The next section covers both the results for parallelism 1 and the results for parallelism 2.

Benchmark Results

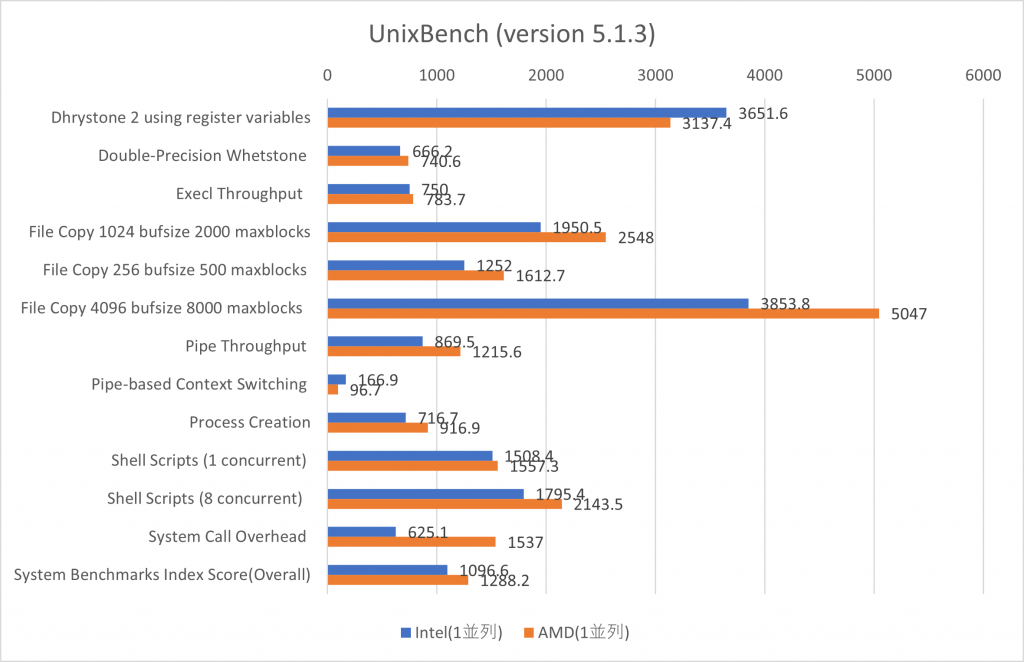

First, the results for parallelism 1.

The blue bars represent the results for the Xeon instance, and the red bars represent the results for the EPYC instance, with larger values indicating better results.

The System Benchmarks Index Score (Overall) at the bottom is the total benchmark score, and the other items are the results of individual tests.
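As far as I understand it, UnixBench derives the overall index as the geometric mean of the per-test index values, which keeps a single outlier test from dominating the total. The following is only a sketch under that assumption. The function name `overall_index` and the sample values are hypothetical and are not taken from this benchmark.

```python
import math


def overall_index(indices):
    """Geometric mean of per-test index values (assumed aggregation)."""
    if not indices:
        raise ValueError("need at least one index value")
    return math.exp(sum(math.log(v) for v in indices) / len(indices))


# Hypothetical per-test indices for illustration only.
sample = [1000.0, 2000.0, 4000.0]
print(round(overall_index(sample), 1))
```

Because a geometric mean is used, doubling the score of one test raises the overall figure by the same factor regardless of which test it is.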

Looking at the results, the EPYC instance performed better overall. The total score was 1288.2 for the EPYC instance, compared to 1096.6 for the Xeon instance, a difference of roughly 17%. Looking at individual items, the EPYC instance performed particularly well in System Call Overhead and File Copy.

System call invocation and file copying are important indicators for server use, so strong results on these items are welcome.

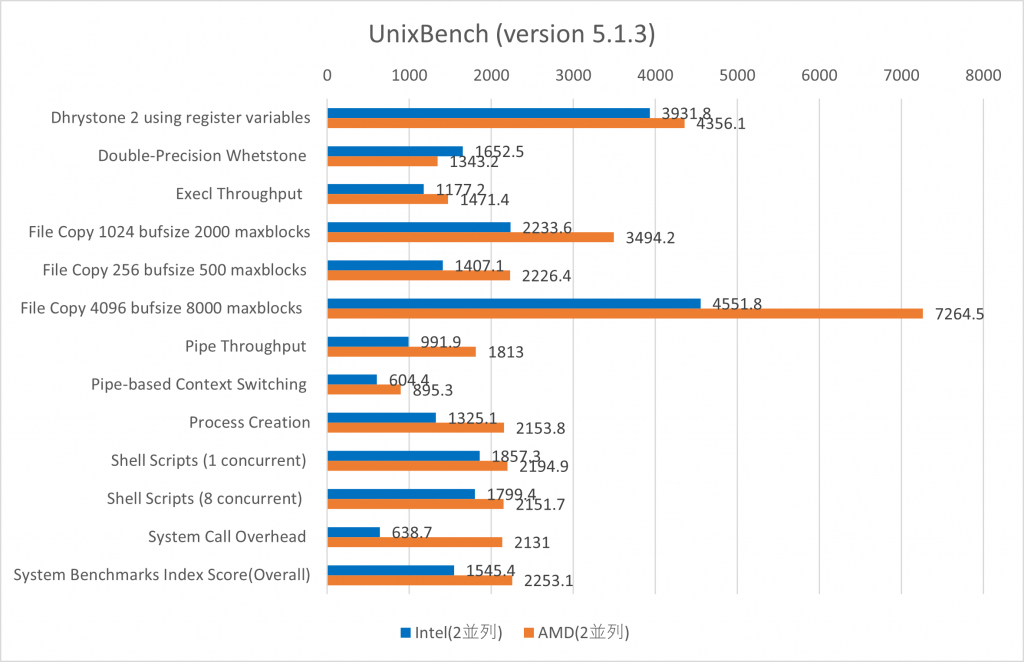

Next, the results for parallelism 2.

As with parallelism 1, the blue bars are the Xeon instance and the red bars are the EPYC instance.

Here too, the EPYC instance has the advantage across most items.

Another thing to note is the total score: the Xeon instance only managed 1545.4, while the EPYC instance reached 2253.1.

Going from parallelism 1 to parallelism 2, the Xeon instance's score rose by a factor of approximately 1.4, while the EPYC instance's score rose by a factor of approximately 1.75.
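The scaling factors can be reproduced from the four total scores quoted in this article. This is a small sketch. The function name `scaling` and the "efficiency" figure (speedup divided by parallelism) are my own additions for illustration.

```python
def scaling(score_1, score_n, n):
    """Return (speedup, efficiency) when going from parallelism 1 to n."""
    speedup = score_n / score_1
    efficiency = speedup / n
    return speedup, efficiency


# Total scores from this article: (parallelism 1, parallelism 2).
results = {
    "Xeon (N2)": (1096.6, 1545.4),
    "EPYC (N2D)": (1288.2, 2253.1),
}

for name, (s1, s2) in results.items():
    speedup, eff = scaling(s1, s2, 2)
    print(f"{name}: speedup x{speedup:.2f}, efficiency {eff:.0%}")
```

Note that lscpu reported 1 core with 2 threads on both instances, so the two vCPUs appear to be hardware threads of the same core, and a full 2x speedup should not be expected on either side.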

Although this is only the result of a single benchmark tool, UnixBench, it suggests that EPYC instances tend to scale better with parallelism than Xeon instances.

Pricing

While not directly related to the benchmark, EPYC instances are priced lower than Xeon instances.

Based on the configuration and specifications used in this benchmark, a Xeon instance costs approximately $74 per month, while an EPYC instance costs approximately $64 per month.
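To put the "value for money" question into a single number, you can divide the benchmark score by the monthly price. The sketch below uses the parallelism 2 total scores and the approximate monthly prices quoted in this article. The metric itself and the name `score_per_dollar` are my own assumptions for illustration.

```python
def score_per_dollar(score, monthly_usd):
    """UnixBench total score obtained per dollar of monthly cost."""
    return score / monthly_usd


# Parallelism 2 total scores and approximate monthly prices from this article.
instances = {
    "Xeon (N2)": {"score": 1545.4, "usd": 74.0},
    "EPYC (N2D)": {"score": 2253.1, "usd": 64.0},
}

for name, data in instances.items():
    value = score_per_dollar(data["score"], data["usd"])
    print(f"{name}: {value:.1f} points per dollar")
```

This is a rough indicator only, since prices vary by region and commitment, and a UnixBench score does not necessarily reflect your actual workload.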

Summary

We launched an instance using AMD EPYC and an instance using Intel Xeon on GCP's Compute Engine and compared them using UnixBench.

In this benchmark, the EPYC instance scored higher than the Xeon instance while also costing less, so we recommend considering EPYC instances when building a server on GCP.

That said, although the Intel Xeon instance came out behind here, the results may differ in other benchmarks, and since the benchmark was only run once, it's possible that this was just a coincidence. Intel Xeon also supports AVX512, which the EPYC instance tested here does not, so it's best to choose the instance that suits your workload on a case-by-case basis.
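One way to reduce the "just a coincidence" risk is to repeat the benchmark several times and look at the spread of the scores. The following is a minimal sketch of such a summary. The function name `summarize` is my own, and the scores are placeholder values, not measurements.

```python
import statistics


def summarize(scores):
    """Return (mean, sample standard deviation, coefficient of variation)."""
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)  # needs at least two runs
    return mean, stdev, stdev / mean


# Placeholder scores for illustration only, NOT measured results.
hypothetical_runs = [90.0, 100.0, 110.0]

mean, stdev, cv = summarize(hypothetical_runs)
print(f"mean={mean:.1f} stdev={stdev:.1f} cv={cv:.1%}")
```

If the gap between two instances is much larger than the run-to-run spread, the difference is less likely to be noise.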

That's all. Thank you very much for reading.
