Let's try using Google Cloud PHP Client, which allows you to easily operate various GCP services

Hello,

I'm Mandai, the Wild Team member of the development team.

Like AWS, GCP also has client libraries that support various programming languages.

This is a very handy tool that allows you to access various GCP services in the same way.

Also, because it's integrated, the usability is consistent across services, so once you learn it, you won't get lost. The more you use it, the more you benefit from it.

This time, I'll be using the PHP client library.

Install using composer

It can be installed using composer, so it's very easy to set up.

GCP client libraries are modularized for each service, so you just need to install the library for the service you need.

In the example below, we are installing the library for Cloud Storage:

composer require google/cloud-storage

This completes the installation. All that's left is to create a service account from the Credentials screen (described later), and you'll be ready to use it.

There are libraries for the other services as well, so I have summarized them in a table:

| Service Name | Module Name | Remarks |
|---|---|---|
| Cloud Storage | google/cloud-storage | |
| Cloud Datastore | google/cloud-datastore | |
| Cloud BigQuery | google/cloud-bigquery | |
| Cloud Spanner | google/cloud-spanner | Beta |
| Cloud Vision | google/cloud-vision | |
| Cloud Translate | google/cloud-translate | |
| Cloud Speech | google/cloud-speech | |
| Cloud Natural Language | google/cloud-language | Some features are in beta |
| Google App Engine | google/cloud-tools | Works with Docker images for Flex environments |
| Cloud Pub/Sub | google/cloud-pubsub | |
| Stackdriver Trace | google/cloud-trace | |
| Stackdriver Logging | google/cloud-logging | |
| Stackdriver Monitoring | google/cloud-monitoring | |
| Stackdriver Error Reporting | google/cloud-error-reporting | |
| Video Intelligence | google/cloud-videointelligence | Beta |
| Cloud Firestore | google/cloud-firestore | Beta |
| Cloud Data Loss Prevention | google/cloud-dlp | Data Loss Prevention API Early Access Program participants only |
| BigQuery Data Transfer Service | google/cloud-bigquerydatatransfer | Private free trial |

- All of them can be installed and used via composer

- Data as of January 18, 2018
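Since each service has its own package, the install command can be put together from the table. The following is only a minimal sketch: `GCP_PACKAGES` and `composerRequireCommand()` are hypothetical names made up for this illustration, and only a few rows of the table are included.

```php
<?php
// Hypothetical lookup table: a few rows copied from the table above.
const GCP_PACKAGES = [
    'Cloud Storage'   => 'google/cloud-storage',
    'Cloud Datastore' => 'google/cloud-datastore',
    'Cloud BigQuery'  => 'google/cloud-bigquery',
    'Cloud Pub/Sub'   => 'google/cloud-pubsub',
];

/**
 * Builds a single "composer require" command for the given service names.
 * Throws if a service is not in the lookup table.
 */
function composerRequireCommand(array $services): string
{
    $packages = [];
    foreach ($services as $service) {
        if (!array_key_exists($service, GCP_PACKAGES)) {
            throw new InvalidArgumentException("Unknown service: {$service}");
        }
        $packages[] = GCP_PACKAGES[$service];
    }

    // composer accepts several package names in one "require" call.
    return 'composer require ' . implode(' ', $packages);
}
```

For example, `composerRequireCommand(['Cloud Storage', 'Cloud Pub/Sub'])` returns `composer require google/cloud-storage google/cloud-pubsub`.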

Create a service account

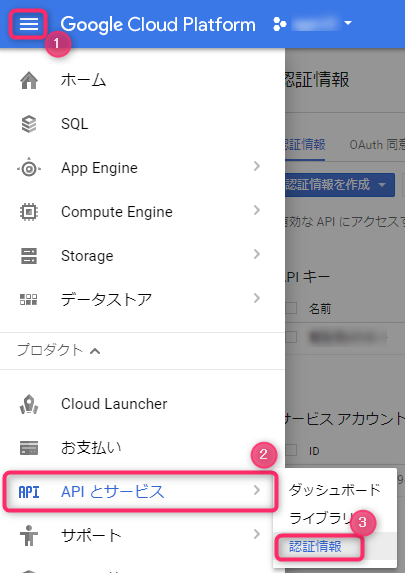

Service accounts can be created in the GCP Cloud Console by opening the Credentials screen from the menu.

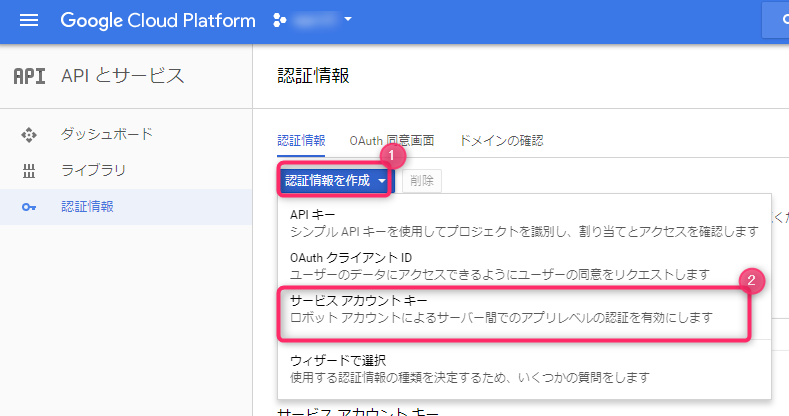

Next, click the "Create Credentials" button and select "Service account key".

To create a new service account, select "New Service Account".

You can name the service account anything you like, as long as it's easy to manage.

The available roles differ from service to service, so there is no single correct choice. The "Owner/Administrator" role has the highest level of authority and can perform every operation within that service, so if choosing a narrower role is too much trouble, this one will work.

For accounts issued to developers, assign a role with read and write permissions.

You can assign multiple roles.

For example, for Datastore, you can assign roles such as User and Index Manager.

The service account ID is formatted like an email address, and you can choose the string before the @.

The P12 key type is difficult to handle from PHP, so select "JSON".

After entering the above information, click the Create button.

Once created, a JSON file containing your authentication information will begin downloading.

This JSON file is very important, so please handle it with care.

This completes the creation of the service account.
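Before handing the downloaded file to the library, it can be handy to check that it really is a service account key. The sketch below assumes the usual layout of a JSON key (`type`, `project_id`, `private_key`, `client_email`). `loadServiceAccountKey()` is a hypothetical helper of my own, not part of the client library.

```php
<?php
/**
 * Loads a service account key file and checks a few fields that a JSON key
 * is assumed to contain. Returns the decoded array, or throws on problems.
 */
function loadServiceAccountKey(string $path): array
{
    if (!is_file($path) || !is_readable($path)) {
        throw new RuntimeException("Key file is not readable: {$path}");
    }

    $key = json_decode(file_get_contents($path), true);
    if (!is_array($key)) {
        throw new RuntimeException("Key file is not valid JSON: {$path}");
    }

    // Assumed field names; adjust if your key file looks different.
    foreach (['type', 'project_id', 'private_key', 'client_email'] as $field) {
        if (empty($key[$field])) {
            throw new RuntimeException("Key file is missing '{$field}'");
        }
    }

    if ($key['type'] !== 'service_account') {
        throw new RuntimeException("Unexpected key type: {$key['type']}");
    }

    return $key;
}
```

The returned array also gives you the `project_id`, which saves typing the project ID by hand elsewhere.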

Sample Program

I remember being stuck because the sample programs in the GCP documentation left out the part that loads the authentication information. So here is a sample program for Cloud Storage that covers everything from setting the authentication information to actually uploading a file:

```php
<?php
require './vendor/autoload.php';

use Google\Cloud\Core\ServiceBuilder;

$keyFilePath = '../hogehoge.json';
$projectId = 'sample-123456';
$bucketName = 'new-my-bucket';
$uploadFile = './test.txt';

$gcloud = new ServiceBuilder([
    'keyFilePath' => $keyFilePath,
    'projectId' => $projectId,
]);

$storage = $gcloud->storage();
$bucket = $storage->createBucket($bucketName);

// If using an existing bucket
// $bucket = $storage->bucket($bucketName);

$bucket->upload(fopen($uploadFile, 'r'));
```

The above example uploads a file called test.txt to the new-my-bucket bucket.
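The sample passes the result of `fopen()` straight to `upload()`, so a missing file only surfaces as an error somewhere inside the library. As a rough sketch, a small helper could check the file first and set the object name explicitly through the `name` option of `upload()`. `prepareUpload()` is a hypothetical name of my own.

```php
<?php
/**
 * Opens a local file for upload and builds the options for Bucket::upload().
 * Returns a two-element array: [stream resource, options array].
 */
function prepareUpload(string $path): array
{
    if (!is_file($path) || !is_readable($path)) {
        throw new RuntimeException("Cannot read upload file: {$path}");
    }

    $handle = fopen($path, 'r');
    if ($handle === false) {
        throw new RuntimeException("Failed to open upload file: {$path}");
    }

    // Store the object under the file's base name, e.g. "test.txt".
    return [$handle, ['name' => basename($path)]];
}
```

With the `$bucket` from the sample, it would be used as `list($handle, $options) = prepareUpload($uploadFile);` followed by `$bucket->upload($handle, $options);`.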

The samples here and here don't use the ServiceBuilder class, but personally I think it's quicker to work from a ServiceBuilder instance, because it hands out clients for each service that have already completed the authentication process.
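For comparison, this is roughly what skipping ServiceBuilder looks like. It is a sketch that assumes `vendor/autoload.php` has already been loaded and that `StorageClient` takes the same `keyFilePath` and `projectId` options. `gcpClientOptions()` and `createStorageClient()` are hypothetical helper names.

```php
<?php
use Google\Cloud\Storage\StorageClient;

/**
 * Builds the options array that both ServiceBuilder and StorageClient accept.
 */
function gcpClientOptions(string $keyFilePath, string $projectId): array
{
    return [
        'keyFilePath' => $keyFilePath,
        'projectId'   => $projectId,
    ];
}

/**
 * Creates a Cloud Storage client directly, without going through ServiceBuilder.
 * Requires google/cloud-storage to be installed and autoloaded.
 */
function createStorageClient(string $keyFilePath, string $projectId): StorageClient
{
    return new StorageClient(gcpClientOptions($keyFilePath, $projectId));
}
```

The direct form is fine for a single service. Once several services are involved, the same options end up being repeated for every client, which is where ServiceBuilder saves some typing.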

The GCP authentication documentation says to set an environment variable called "GOOGLE_APPLICATION_CREDENTIALS" from the command line, but I personally prefer the method introduced here, because it needs less advance preparation and everything is done in PHP alone.
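If you do want the environment variable route, it can also be set from inside PHP for the current process. The sketch below is written under the assumption that the library reads `GOOGLE_APPLICATION_CREDENTIALS` through `getenv()`. `useKeyFileFromEnvironment()` is a hypothetical helper name.

```php
<?php
/**
 * Points GOOGLE_APPLICATION_CREDENTIALS at a key file for this process only.
 * Returns the absolute path that was set.
 */
function useKeyFileFromEnvironment(string $keyFilePath): string
{
    // Resolve to an absolute path so later chdir() calls do not break it.
    $resolved = realpath($keyFilePath);
    if ($resolved === false) {
        throw new RuntimeException("Key file not found: {$keyFilePath}");
    }

    putenv('GOOGLE_APPLICATION_CREDENTIALS=' . $resolved);

    return $resolved;
}
```

After calling it, a client created without `keyFilePath` should pick the key up from the environment, but I have only sketched this, so please verify it in your own setup.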

Summary

There are only benefits!

What do you think of the GCP service client libraries?

I was hoping there would be a client library for Cloud SQL, but it seems there isn't.

There is no GCE version either, but there is no benefit to using PHP for VM management in the first place.

I think the best thing to do there is just use the gcloud command.

There are even clients for services that aren't publicly available yet, such as Cloud Data Loss Prevention and BigQuery Data Transfer Service, and I think the speed at which the library is being developed is incredible.

In particular, Cloud Storage is by far the cheapest of GCP's storage services, even on the highest-tier multi-regional plan (65% of the price of the next cheapest option, standard persistent disk). If you are planning to deploy a system on GCP, you should actively use it from your program instead of storing files locally.

That's all.
