How much space can Lepton, Dropbox's lossless JPEG compression tool, free up?


Hello,

I'm Mandai, the "wild" member of the development team.

I tried out lepton, a lossless compression tool for JPEG files developed by Dropbox, in various ways to see how much it can actually shrink image files.

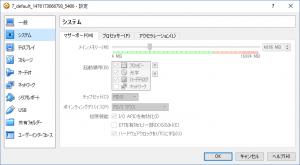

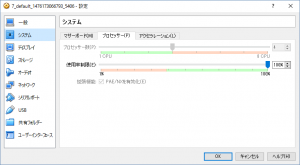

For this test I used CentOS 7, running in a virtual machine created with VirtualBox.

The screenshots show the host's Windows system information and the resources allocated to the VM in VirtualBox.

If you would like to reproduce the same environment and try this out easily, please refer to this article on creating a virtual environment.

Lepton is sensitive to the build environment, so be aware that it may not compile properly on CentOS 6 or earlier.

(In fact, I was unable to compile it on a CentOS 6.7 VM despite trying various things.)

Installing lepton

Installing lepton is very easy.

As described on GitHub, run the bundled autogen.sh, which downloads the modules the build depends on and generates the build environment with autoreconf. Then configure and build as usual.

If autogen.sh fails, try installing automake and autoconf with yum.
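Before running autogen.sh, you can check up front which build tools are missing instead of waiting for it to fail. This is a minimal sketch. `missing_tools` is a hypothetical helper, not part of lepton. The tool list in the usage line is also an assumption about what the build needs, not an official requirements list.

```shell
# missing_tools TOOL...  (hypothetical helper, not part of lepton)
# Prints each named command that cannot be found on PATH, one per line, and
# returns 1 if any are missing (0 if all are present).
missing_tools() {
  local t rc=0
  for t in "$@"; do
    if ! command -v "$t" >/dev/null 2>&1; then
      printf '%s\n' "$t"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `missing_tools git autoconf automake make g++` lists whatever is absent. If autoconf or automake shows up, `sudo yum install -y autoconf automake` installs both.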

```shell
git clone https://github.com/dropbox/lepton.git
cd lepton
sh autogen.sh
./configure
make -j4
sudo make install
```

On a multi-core machine, make runs much faster with the "-j" option, which runs several build jobs in parallel.

The above command was run on a PC with a 4-core CPU.

The number of CPU cores can be obtained using the nproc command, so it's possible to do something like "make -j$(nproc)" (wild!)

How much reduction can be achieved?

Now it's time for the long-awaited conversion.

Let's start with this image.

The file size is 5,816,548 bytes, or just over 5 MB, which I think is pretty large for a JPEG image.

The command to convert this to lepton format is as follows:

```
$ time lepton IMGP2319.JPG compressed.lep
lepton v1.0-1.2.1-35-ge230990
14816784 bytes needed to decompress this file
4349164 5816548 74.77%
15946404 bytes needed to decompress this file
4349164 5816548 74.77%

real    0m1.102s
user    0m2.676s
sys     0m0.053s
```

The time command is there only to measure the execution time. Plain "lepton IMGP2319.JPG compressed.lep" is all you actually need.

The result shows that the file was compressed to 74.77% of its original size.
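The percentage lepton prints is simply the lep size divided by the original size, as the "4349164 5816548 74.77%" line shows. Below is a minimal sketch of that arithmetic. `lep_ratio` is a hypothetical helper that prints the lepton-style figure followed by the corresponding size reduction.

```shell
# lep_ratio ORIGINAL_BYTES LEP_BYTES  (hypothetical helper, not part of lepton)
# Prints the .lep size as a percentage of the original (the figure lepton
# reports) followed by the corresponding size reduction, both to 2 decimals.
lep_ratio() {
  awk -v o="$1" -v l="$2" \
    'BEGIN { printf "%.2f %.2f\n", l / o * 100, (1 - l / o) * 100 }'
}
```

With the sizes above, `lep_ratio 5816548 4349164` prints `74.77 25.23`, meaning the file became about 25% smaller.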

The output of ls is as follows:

```
$ ls -al
-rw-rw-r--. 1 vagrant vagrant 5816548 Oct 11 09:33 IMGP2319.JPG
-rw-------. 1 vagrant vagrant 4349164 Oct 11 09:49 compressed.lep
```

The conversion seems to have gone through without any problems.

Now, let's convert it back to the original format.

```
$ time lepton compressed.lep decompressed.jpg
lepton v1.0-1.2.1-35-ge230990
15946404 bytes needed to decompress this file
4349164 5816548 74.77%

real    0m0.370s
user    0m1.235s
sys     0m0.022s
$ ls -al
-rw-rw-r--. 1 vagrant vagrant 5816548 Oct 11 09:33 IMGP2319.JPG
-rw-------. 1 vagrant vagrant 4349164 Oct 11 09:52 compressed.lep
-rw-------. 1 vagrant vagrant 5816548 Oct 11 09:54 decompressed.jpg
```

IMGP2319.JPG is the original image. decompressed.jpg is the result of converting it to lepton format and then back to JPEG.

The file sizes are identical, and diff confirms that the two files are exactly the same.
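The round trip and the byte-for-byte comparison can be wrapped up so they are easy to repeat for any image. This is a sketch, assuming lepton is on PATH. `roundtrip_check` is a hypothetical helper name. It compares with `cmp -s`, which reports only through its exit status, rather than diff.

```shell
# roundtrip_check FILE  (hypothetical helper, not part of lepton)
# Compresses FILE to a temporary .lep, decompresses it again and compares the
# result with the original byte for byte. Returns 0 if identical, 1 if they
# differ, 2 if lepton (or mktemp) failed. Assumes lepton is on PATH.
roundtrip_check() {
  local src=$1 tmp rc
  tmp=$(mktemp -d) || return 2
  if lepton "$src" "$tmp/c.lep" >/dev/null 2>&1 &&
     lepton "$tmp/c.lep" "$tmp/d.jpg" >/dev/null 2>&1; then
    if cmp -s "$src" "$tmp/d.jpg"; then rc=0; else rc=1; fi
  else
    rc=2                                 # one of the lepton runs failed
  fi
  rm -rf "$tmp"                          # remove the temporary files
  return "$rc"
}
```

Usage: `roundtrip_check IMGP2319.JPG && echo identical`.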

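However, be aware that the permissions have changed. The restored decompressed.jpg (like compressed.lep) is -rw-------, whereas the original is -rw-rw-r--.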
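If the original permissions matter, you can copy the mode bits back from the original file. This small sketch relies on chmod's `--reference` option, which is part of GNU coreutils and therefore available on CentOS. `copy_mode` is a hypothetical wrapper name.

```shell
# copy_mode REFERENCE TARGET  (hypothetical helper)
# Gives TARGET the same permission bits as REFERENCE, e.g. to turn the
# -rw------- of a restored JPEG back into the original's -rw-rw-r--.
# --reference is a GNU coreutils chmod option.
copy_mode() {
  chmod --reference="$1" -- "$2"
}
```

For example, `copy_mode IMGP2319.JPG decompressed.jpg` gives decompressed.jpg the same -rw-rw-r-- mode as the original.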

Converting a large number of images (jpeg → lep format)

We converted a large number of images to see how much the file size shrinks on average.

First, from JPEG to lep format.
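A batch run like this can be scripted roughly as follows. This is a sketch under stated assumptions, not necessarily how the figures below were collected. `convert_dir` is a hypothetical helper. It assumes lepton is on PATH and GNU date is available (for the `%N` nanoseconds field). Each .lep file is written next to its source, and the function prints one tab-separated line per file.

```shell
# convert_dir DIR  (hypothetical helper, not part of lepton)
# Compresses every *.jpg / *.JPG in DIR to NAME.lep next to the original and
# prints one tab-separated line per file:
#   name  original_bytes  lep_bytes  reduction_%  elapsed_seconds
# Assumes the lepton binary is on PATH and GNU date (for %N nanoseconds).
convert_dir() {
  local dir=$1 f out start end
  for f in "$dir"/*.[Jj][Pp][Gg]; do
    [ -e "$f" ] || continue              # the glob matched nothing
    out="${f%.*}.lep"
    start=$(date +%s.%N)
    if ! lepton "$f" "$out" >/dev/null 2>&1; then
      echo "lepton failed: $f" >&2
      continue
    fi
    end=$(date +%s.%N)
    awk -v n="$(basename "$f")" -v o="$(wc -c < "$f")" -v l="$(wc -c < "$out")" \
        -v s="$start" -v e="$end" \
        'BEGIN { printf "%s\t%d\t%d\t%.2f\t%.3f\n", n, o, l, (1 - l / o) * 100, e - s }'
  done
}
```

For example, `convert_dir ~/photos > sizes.tsv` (a hypothetical directory and file) records one line per image. Summing the fifth column gives the total conversion time. The loop runs files one at a time, so the per-file times are not distorted by several lepton processes competing for the CPU.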

Lepton processed 159 files with a total size of 807MB.

Converting these files took 569.686 seconds in total, an average of 3.582931 seconds per file.

In total, 826,028 KB of JPEG files became 627,912 KB in lep format.

Overall, the total size shrank by 23.98%, a little better than the 22% Dropbox officially cites, so the results were as expected.

The best file was reduced by 29.58%.

Conversely, the worst file was reduced by 21.23%. The median was 23.82%, so I think it's safe to say that, on average, a reduction of more than 22% was achieved (right?).
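Summary figures like these (overall reduction, best, worst, median) can be computed from a per-file size list with a little awk. This is a sketch, assuming tab-separated input lines of name, original bytes, and lep bytes. `summarize_reductions` is a hypothetical helper. The overall figure uses the summed sizes. That is how 826,028 KB and 627,912 KB work out to 23.98%.

```shell
# summarize_reductions  (hypothetical helper)
# Reads tab-separated lines "name<TAB>original_bytes<TAB>lep_bytes[...]" on
# stdin and prints:
#   overall          reduction computed from the summed sizes
#   min/median/max   of the per-file reductions
summarize_reductions() {
  local data
  data=$(cat)                            # buffer stdin; it is read twice
  printf '%s\n' "$data" | awk -F'\t' '
    { orig += $2; lep += $3 }
    END { if (orig > 0) printf "overall %.2f\n", (1 - lep / orig) * 100 }'
  printf '%s\n' "$data" |
    awk -F'\t' '$2 > 0 { printf "%.4f\n", (1 - $3 / $2) * 100 }' |
    LC_ALL=C sort -n |
    awk '
      { v[NR] = $1 }
      END {
        if (NR == 0) exit
        med = (NR % 2) ? v[(NR + 1) / 2] : (v[NR / 2] + v[NR / 2 + 1]) / 2
        printf "min %.2f\nmedian %.2f\nmax %.2f\n", v[1], med, v[NR]
      }'
}
```

Usage: `summarize_reductions < sizes.tsv`, where sizes.tsv is a hypothetical per-file list. Feeding it the single line `all<TAB>826028<TAB>627912` prints `overall 23.98`.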

By the way, the CPU load seemed to be distributed across all cores, so there was never a situation where only one core was heavily loaded.

Converting a large number of images (lep format → jpeg)

Next, let's convert the lep files we just created back to JPEG.
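The reverse direction can be scripted in a similar way. This is a sketch assuming lepton is on PATH and GNU date is available. `restore_dir` is a hypothetical helper. It writes NAME.restored.jpg so that an original NAME.jpg in the same directory is never overwritten, and it prints the file name and elapsed seconds for each file.

```shell
# restore_dir DIR  (hypothetical helper, not part of lepton)
# Decompresses every *.lep in DIR back to JPEG as NAME.restored.jpg (so an
# original NAME.jpg in the same directory is never overwritten) and prints
#   name  elapsed_seconds
# as one tab-separated line per file. Assumes lepton on PATH and GNU date.
restore_dir() {
  local dir=$1 f start end
  for f in "$dir"/*.lep; do
    [ -e "$f" ] || continue              # the glob matched nothing
    start=$(date +%s.%N)
    if ! lepton "$f" "${f%.lep}.restored.jpg" >/dev/null 2>&1; then
      echo "lepton failed: $f" >&2
      continue
    fi
    end=$(date +%s.%N)
    awk -v n="$(basename "$f")" -v s="$start" -v e="$end" \
        'BEGIN { printf "%s\t%.3f\n", n, e - s }'
  done
}
```

For example, `restore_dir ~/photos > times.tsv` (hypothetical paths) records one decompression time per file.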

Converting them back took 232.158 seconds in total, an average of 1.460113 seconds per file.

Again, this varied by file: the slowest took 1.992 seconds, while the fastest took only 1.246 seconds.

The median execution time was 1.436 seconds.
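Timing figures like these (total, average, median, fastest and slowest) can be reproduced from a list of per-file durations. This is a sketch, assuming one duration in seconds per input line. `summarize_times` is a hypothetical helper. `LC_ALL=C` keeps `sort -n` treating "." as the decimal point regardless of locale.

```shell
# summarize_times  (hypothetical helper)
# Reads one duration in seconds per line on stdin and prints the count, total,
# mean, median, min and max. Exits with status 1 if there is no input.
summarize_times() {
  LC_ALL=C sort -n | awk '
    { v[NR] = $1; total += $1 }
    END {
      if (NR == 0) exit 1
      med = (NR % 2) ? v[(NR + 1) / 2] : (v[NR / 2] + v[NR / 2 + 1]) / 2
      printf "count %d\ntotal %.3f\nmean %.6f\nmedian %.3f\nmin %.3f\nmax %.3f\n",
        NR, total, total / NR, med, v[1], v[NR]
    }'
}
```

As a toy input, `printf '1.992\n1.246\n1.436\n' | summarize_times` reports a median of 1.436 and a mean of 1.558000. For a real run, pipe in the elapsed-time column of a per-file log, e.g. `cut -f2 times.tsv | summarize_times` (times.tsv is hypothetical).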

Conclusion

If you run a service that stores a large number of JPEG images, converting them to lep format when saving them to AWS S3 or similar storage could save you money.

However, compression and decompression demand a fair amount of machine power.

Conversion time depends heavily on CPU performance and memory usage, so you will need to tune it to suit your machine.

You can control memory, processes, and threads with options.

Another feature not to be overlooked: lepton can apparently be launched as a server that receives files over a Unix domain socket or on a specified listening port. It seems intended for setting up a separate lepton conversion server that does nothing but convert files, so depending on your setup, this could be very useful.

Lepton has already been put to practical use within Dropbox and is proving to be a success.

If you're having trouble with the file size of your JPEG images, why not give it a try?

That's all.
