Using AWS Lambda to automatically generate thumbnail images triggered by S3 image upload


My name is Teraoka, and I am an infrastructure engineer.

The topic of this article is "Lambda," one of the AWS services.

AWS Lambda (Serverless Code Execution and Automatic Management) | AWS

By the way, it is pronounced "lam-da". Where did the "b" in the middle go?

Putting that aside...

There are already many articles like this one, and I don't know how many times this topic has been rehashed, but I tinkered with it a bit as a form of self-study.

This time, I'll use lambda to create a thumbnail image. For more details, please see below ↓

■What is “lambda” in the first place?

Yes, let's start with an overview of lambda.

As usual, I will quote from the official AWS documentation.

What is AWS Lambda - AWS Lambda - AWS Documentation

"AWS Lambda is a compute service that lets you run code without provisioning or managing servers."

...I see.

Lambda can execute pre-registered code using some kind of "event" as a trigger.

Also, because the processing is executed asynchronously when the event fires, there is no need to keep EC2 instances running all the time.

This is why you don't need to provision or manage servers.

Now, the word "event" has come up a few times already. Put simply, an event is something like this:

- A file was uploaded to an S3 bucket

In other words, it is possible to automatically execute some process when a file is uploaded to an S3 bucket.

Of course, this does not require calling an API on an EC2 instance.

It can be done using only S3 and Lambda.
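
To make the idea of an "event" a little more concrete, here is a minimal sketch of what the function sees when S3 reports an upload. The helper names (`decodeS3Key`, `listUploadedObjects`) and the sample values are made up for illustration; the sample only mimics the part of the event that carries the bucket name and the object key.

```javascript
'use strict';

// Decode an object key as it appears in an S3 event notification:
// keys arrive URL-encoded, with spaces represented as "+".
function decodeS3Key(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Hypothetical helper: list the bucket/key pairs carried by an S3 event.
function listUploadedObjects(event) {
  return (event.Records || []).map((record) => ({
    bucket: record.s3.bucket.name,
    key: decodeS3Key(record.s3.object.key),
  }));
}

// A trimmed-down stand-in for the event S3 sends on upload (illustrative values).
const sampleEvent = {
  Records: [
    { s3: { bucket: { name: 'example-bucket' }, object: { key: 'my+photo.jpg' } } },
  ],
};

console.log(listUploadedObjects(sampleEvent));
```

The Lambda function only has to read these two values to know which file to process, which is why no always-on server is needed.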

If you have read this far, along with the title of this post, you have probably guessed it already:

I would like to "automatically generate thumbnail images when image files are uploaded to an S3 bucket".

■The journey to creating thumbnail images

- Prepare an S3 bucket to upload images to

- Write the Lambda function

- Test the function

- Set up the Lambda trigger

- Check the operation

① Prepare an S3 bucket to upload images to

First, we need to prepare a container before we can get started, so let's quickly create a bucket in S3.

Please refer to the following article for creating a bucket and setting a policy.

I wrote about it before, so I'll take this opportunity to promote it.

② Writing the Lambda function

Now, onto the main topic: writing a lambda function.

We will write the code to "automatically generate thumbnail images" and register it with lambda.

The code can be written in Python or Node.js, but

this time I will follow my personal preference and write it in Node.js (apologies to Python fans).

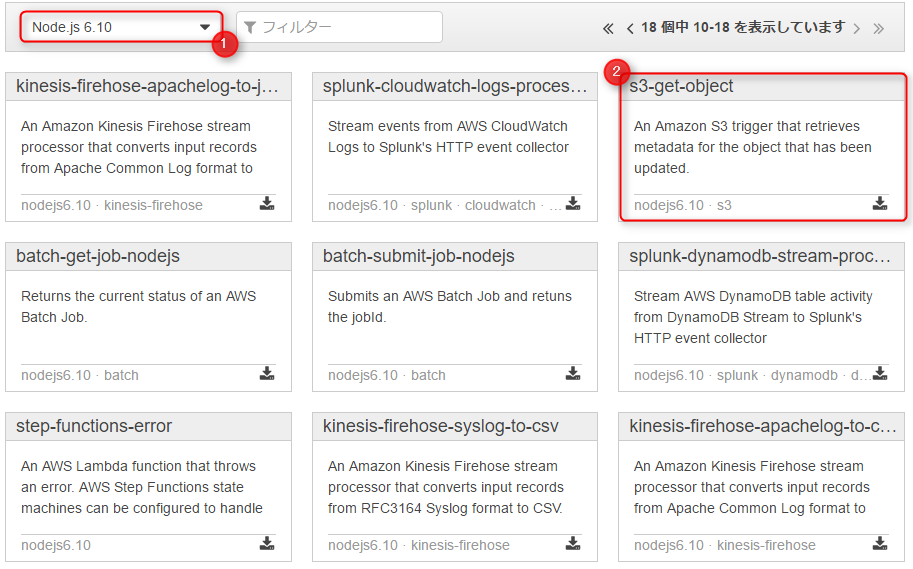

First, click the button that says "Create a Lambda Function," and you should see the following screen.

On this screen, select the blueprint for the Lambda function.

AWS provides ready-made templates, so I will gratefully use one.

First, select "Node.js 6.10" from the runtime selection.

As for the blueprint, I want to process S3, so I will use something called "s3-get-object".

Clicking the blueprint takes you to the trigger settings screen.

| Setting | Value |
|---|---|
| Bucket | Select the bucket you created in ① |
| Event type | This time, we want to execute the function "when the image is uploaded", so we will select Put |
| Prefix | This time we will upload directly to the bucket, so we will omit this |
| Suffix | Set to "jpg" so that the function will only be executed on files whose file names end in "jpg" |

Do not check the Enable trigger box.

You will enable it manually after checking the operation of the function you will write later.

Click Next when you have finished entering the information.
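
The prefix and suffix settings can be pictured as a simple string match on the object key. The sketch below assumes the filter is a plain, case-sensitive "starts with / ends with" check; `matchesTriggerFilter` is a hypothetical helper written for this illustration, not part of any AWS SDK.

```javascript
'use strict';

// Hypothetical helper mimicking the trigger's prefix/suffix filter:
// the key must start with `prefix` and end with `suffix`
// (an empty string means "no filter").
function matchesTriggerFilter(key, filter) {
  const prefix = filter.prefix || '';
  const suffix = filter.suffix || '';
  return key.startsWith(prefix) && key.endsWith(suffix);
}

// The settings used in this article: no prefix, suffix "jpg".
const filter = { prefix: '', suffix: 'jpg' };

for (const key of ['test.jpg', 'test.png', 'test-thumbnail.jpeg', 'TEST.JPG']) {
  console.log(key, matchesTriggerFilter(key, filter));
}
```

Under that assumption only `test.jpg` matches. A thumbnail written back to the same bucket with a name ending in `.jpeg` would not fire the trigger again, whereas one ending in `.jpg` would, so the suffix is worth choosing with the output file names in mind.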

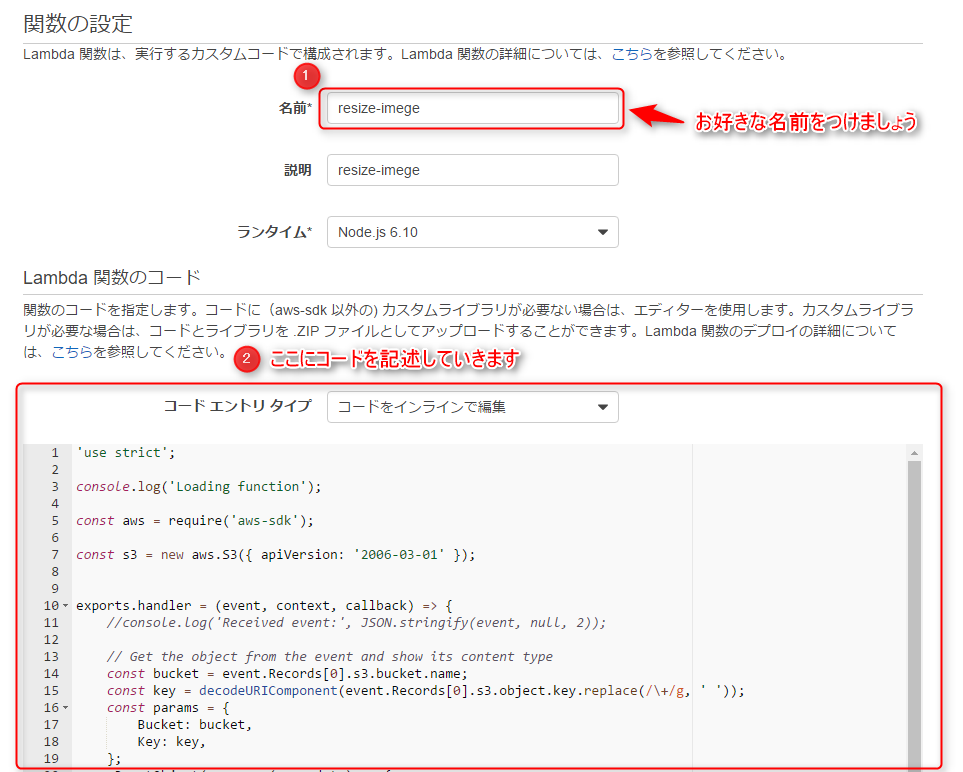

This is the screen where you will actually write the function.

There is a field to enter the function name, but you can use any name you like.

Since you selected "s3-get-object" on the blueprint selection screen,

the code to retrieve files from S3 is already written.

    'use strict';

    console.log('Loading function');

    const aws = require('aws-sdk');

    const s3 = new aws.S3({ apiVersion: '2006-03-01' });

    exports.handler = (event, context, callback) => {
        //console.log('Received event:', JSON.stringify(event, null, 2));

        // Get the object from the event and show its content type
        const bucket = event.Records[0].s3.bucket.name;
        const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));
        const params = {
            Bucket: bucket,
            Key: key,
        };
        s3.getObject(params, (err, data) => {
            if (err) {
                console.log(err);
                const message = `Error getting object ${key} from bucket ${bucket}. Make sure they exist and your bucket is in the same region as this function.`;
                console.log(message);
                callback(message);
            } else {
                console.log('CONTENT TYPE:', data.ContentType);
                callback(null, data.ContentType);
            }
        });
    };

However, although you can retrieve files from S3 as is,

you cannot generate thumbnail images.

Let's add a few things to this code.

    'use strict';

    console.log('Loading function');

    const im = require('imagemagick');
    const aws = require('aws-sdk');

    const s3 = new aws.S3({ apiVersion: '2006-03-01' });

    exports.handler = (event, context, callback) => {
        // Get the bucket name and the (URL-decoded) object key from the event
        const bucket = event.Records[0].s3.bucket.name;
        const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));
        const params = {
            Bucket: bucket,
            Key: key,
        };
        s3.getObject(params, (err, data) => {
            if (err) {
                console.log(err);
                const message = `Error getting object ${key} from bucket ${bucket}. Make sure they exist and your bucket is in the same region as this function.`;
                console.log(message);
                callback(message);
            } else {
                // Derive the output format from the content type, e.g. "image/jpeg" -> "jpeg"
                const contentType = data.ContentType;
                const extension = contentType.split('/').pop();
                console.log(extension);

                // Resize the downloaded image to a width of 100
                im.resize({ srcData: data.Body, format: extension, width: 100 }, (resizeErr, stdout, stderr) => {
                    if (resizeErr) {
                        console.log('resize failed', resizeErr);
                        callback(resizeErr);
                    } else {
                        // Upload the result next to the original as "<name>-thumbnail.<extension>"
                        const thumbnailKey = key.split('.')[0] + '-thumbnail.' + extension;
                        s3.putObject({
                            Bucket: bucket,
                            Key: thumbnailKey,
                            Body: Buffer.from(stdout, 'binary'),
                            ContentType: contentType,
                        }, (putErr, res) => {
                            if (putErr) {
                                console.log('error putting object', putErr);
                                callback(putErr);
                            } else {
                                callback(null, 'success putting object');
                            }
                        });
                    }
                });
            }
        });
    };

With the additions, the code looks like the above.

The process is the same up to the point where the file is retrieved from S3, but

the retrieved image is then resized to a width of 100 using imagemagick, and

the processed image is uploaded back to S3 as a thumbnail image.
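
One detail worth a closer look is how the thumbnail's key is built. The function above takes everything before the first "." in the key, which works for `test.jpg` but would cut the name short for keys that contain other dots. The following is only a sketch of one alternative, using Node's built-in `path` module; `thumbnailKeyFor` and `isThumbnailKey` are hypothetical helpers and not part of the function in this article.

```javascript
'use strict';

const path = require('path');

// Hypothetical helper: build the thumbnail key from the original key and
// the extension taken from the content type (e.g. "image/jpeg" -> "jpeg").
function thumbnailKeyFor(key, contentType) {
  const extension = contentType.split('/').pop();
  // Split the key into its "directory" part and its file name without extension.
  const parsed = path.posix.parse(key);
  const dir = parsed.dir ? `${parsed.dir}/` : '';
  return `${dir}${parsed.name}-thumbnail.${extension}`;
}

// Hypothetical guard: true if the key already looks like a generated thumbnail.
function isThumbnailKey(key) {
  return /-thumbnail\.[^./]+$/.test(key);
}

console.log(thumbnailKeyFor('test.jpg', 'image/jpeg'));
console.log(thumbnailKeyFor('photos/2017.06/cat.v2.jpg', 'image/jpeg'));
console.log(isThumbnailKey('test-thumbnail.jpeg'));
```

A guard like `isThumbnailKey` could be checked at the top of the handler so that a thumbnail which happens to match the trigger's suffix is not resized again.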

Once you have written the code, you need to configure the IAM role.

I will skip the detailed steps here and simply have a new role created automatically from a template.

A policy for manipulating S3 objects is attached to the role created here, and

if it is not configured correctly, the function will fail.

(Access Denied due to insufficient access permissions to S3 is a common occurrence.)
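
For reference, the permissions this function needs can be written down as a policy document. The sketch below is an assumption about the minimum required (reading the original, writing the thumbnail, and writing logs), not a copy of what the console template generates; the bucket name is borrowed from the test event used later in this article.

```javascript
'use strict';

// Assumed bucket name, borrowed from the test event in this article.
const bucketName = 'lambda-img-resize';

// A sketch of an IAM policy document, not necessarily what the template creates.
const policy = {
  Version: '2012-10-17',
  Statement: [
    {
      // Read the uploaded image and write the thumbnail back
      Effect: 'Allow',
      Action: ['s3:GetObject', 's3:PutObject'],
      Resource: `arn:aws:s3:::${bucketName}/*`,
    },
    {
      // Let the function write its console.log output to CloudWatch Logs
      Effect: 'Allow',
      Action: ['logs:CreateLogGroup', 'logs:CreateLogStream', 'logs:PutLogEvents'],
      Resource: 'arn:aws:logs:*:*:*',
    },
  ],
};

console.log(JSON.stringify(policy, null, 2));
```

If the function fails with Access Denied, comparing the role's attached policy against something like this is a reasonable first check.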

Clicking Next will take you to a confirmation screen, so press the "Create Function" button.

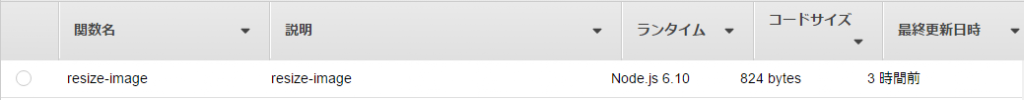

The Lambda function will then be created.

The function you just created is displayed in the list.

③ Function operation test

Test your function to make sure it works correctly.

Select Set test event from the Actions menu at the top of the screen.

This will take you to the test event input screen.

The test event is written in JSON format.

Think of this JSON as the notification that Lambda receives from S3 when it executes the function.

In this example, we will upload an image file called test.jpg to an S3 bucket in advance,

and then test whether the Lambda function works correctly assuming that the image has been uploaded.

A template is also provided for this test event, so

let's use the S3 Put template.
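
The same idea can be tried outside the console. The sketch below builds a minimal S3 Put event and passes it to a stand-in handler with the same `(event, context, callback)` shape as the Lambda function. Both `buildS3PutEvent` and `fakeHandler` are hypothetical names for this illustration, and the stand-in never touches S3; it only shows which bucket and key the real function would work on.

```javascript
'use strict';

// Hypothetical helper: build a minimal S3 Put event for local experiments.
// Only the fields the handler in this article reads are filled in.
function buildS3PutEvent(bucket, key) {
  return {
    Records: [
      {
        eventSource: 'aws:s3',
        eventName: 'ObjectCreated:Put',
        s3: {
          bucket: { name: bucket, arn: `arn:aws:s3:::${bucket}` },
          // S3 URL-encodes keys in notifications, with spaces as "+".
          object: { key: encodeURIComponent(key).replace(/%20/g, '+') },
        },
      },
    ],
  };
}

// Stand-in handler: it only reports what it would process, without calling S3.
function fakeHandler(event, context, callback) {
  const record = event.Records[0];
  const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '));
  callback(null, `would resize ${record.s3.bucket.name}/${key}`);
}

fakeHandler(buildS3PutEvent('lambda-img-resize', 'test.jpg'), {}, (err, result) => {
  console.log(err || result);
});
```

Swapping `fakeHandler` for the real exported handler would require the AWS SDK, credentials, and an existing object in the bucket, so the console test described here remains the simpler route.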

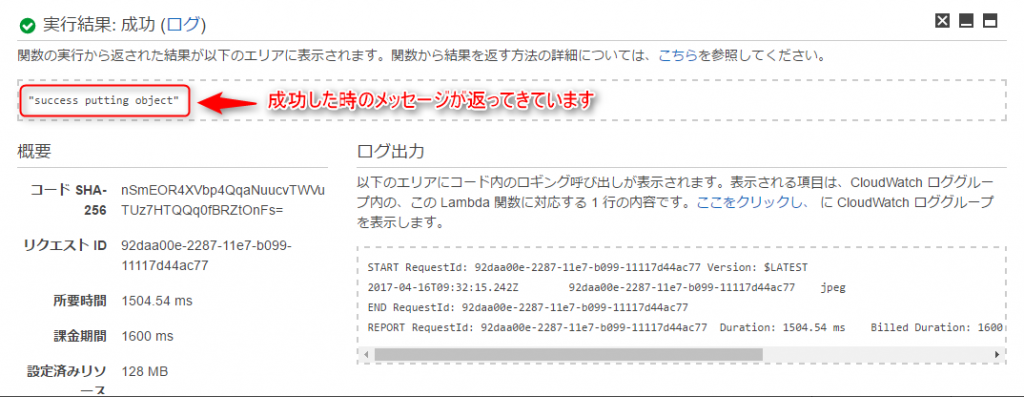

Enter the following and click Save and Test.

    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "requestParameters": {
            "sourceIPAddress": "*"
          },
          "s3": {
            "configurationId": "testConfigRule",
            "object": {
              "eTag": "0123456789abcdef0123456789abcdef",
              "sequencer": "0A1B2C3D4E5F678901",
              "key": "test.jpg",
              "size": 1024
            },
            "bucket": {
              "arn": "arn:aws:s3:::lambda-img-resize",
              "name": "lambda-img-resize",
              "ownerIdentity": {
                "principalId": "EXAMPLE"
              }
            },
            "s3SchemaVersion": "1.0"
          },
          "responseElements": {
            "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH",
            "x-amz-request-id": "EXAMPLE123456789"
          },
          "awsRegion": "ap-northeast-1",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "EXAMPLE"
          },
          "eventSource": "aws:s3"
        }
      ]
    }

A message indicating success was returned as the execution result!

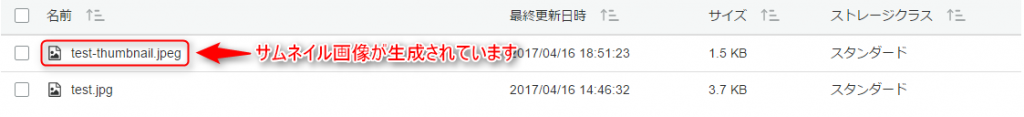

Let's check to see if there were any changes in the contents of the S3 bucket.

Yes, the thumbnail image has been generated without a hitch.

There seems to be no problem with the execution of the function itself.

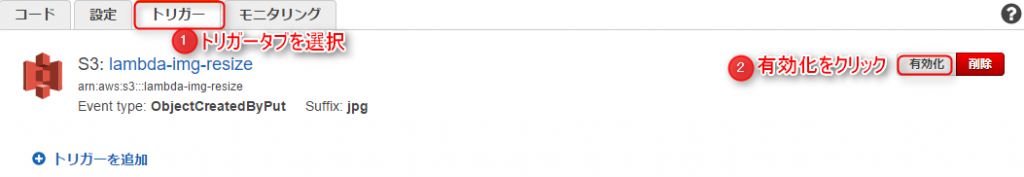

④ Setting up the Lambda trigger

Now we are finally at the final stage, where we will set up the trigger.

Up until now, this has only been a test, so

even if you upload a new image at this stage, a thumbnail image will not be created.

We need to set up a trigger so that the function will be executed automatically when an image is uploaded.

Click the Triggers tab from the lambda function we created.

The trigger itself was created when creating the function, so all that's left is to enable it.

Click Enable.

⑤ Operation check

Now let's actually upload an image to S3 and check.

...It's been created. Wonderful.

■Summary

What did you think?

It's a little complicated because you need to write code, but

it's convenient and interesting because you can do this much without needing EC2.

I only touched on it briefly this time, but I'd like to try something a little more advanced.

That's all, thank you.
